Recent News

- Ms M Krishna Kumari’s Innovative Approach to Adaptive NDN Caching September 25, 2025

Assistant Professor, Dr M Krishna Kumari, Assistant Professor, Department of Computer Science and Engineering, SRM University AP, has published her paper “Adaptive NDN Caching: Leveraging Dynamic Behaviour for Enhanced Efficiency” in the Q1 Journal of Network and Computer Applications with an impact factor of 8.0. The paper reimagines the internet using Named Data Networking

Assistant Professor, Dr M Krishna Kumari, Assistant Professor, Department of Computer Science and Engineering, SRM University AP, has published her paper “Adaptive NDN Caching: Leveraging Dynamic Behaviour for Enhanced Efficiency” in the Q1 Journal of Network and Computer Applications with an impact factor of 8.0. The paper reimagines the internet using Named Data Networking

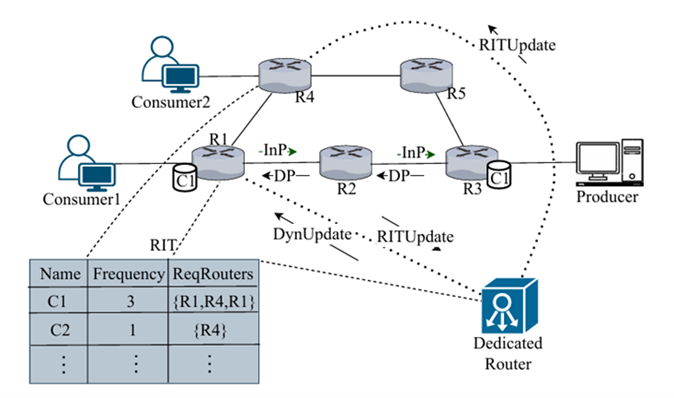

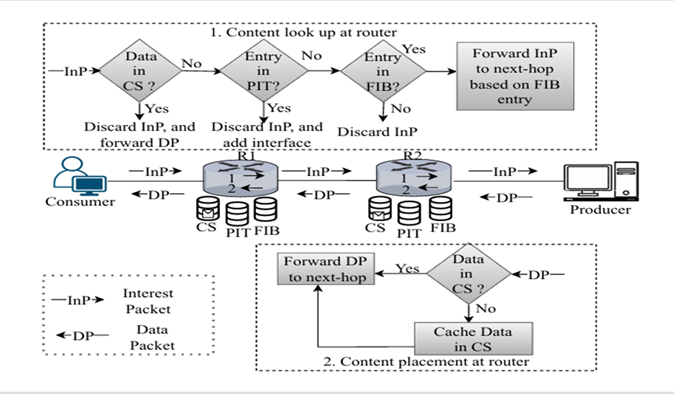

(NDN), which shifts the focus from location to data-centric communication. The study introduces an adaptive caching strategy that will lead to faster responses, smoother access, and fewer delays, even when tested on real-world topologies.Abstract of the Research:

The publication “Adaptive NDN Caching: Leveraging Dynamic Behaviour for Enhanced Efficiency” proposes a dynamic caching strategy for Named Data Networking (NDN), a next-generation Internet architecture that shifts from host-based to data-centric communication. The approach continuously adapts to real-time content demand by monitoring request patterns and placing frequently accessed data closer to end users. This adaptive mechanism improves efficiency, reduces delays, and raises overall network performance when tested on real-world topologies.
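To make "monitoring request patterns and placing frequently accessed data closer to end users" more concrete, here is a minimal sketch of a popularity-aware content store for a single router. It is an illustration under our own assumptions, not the algorithm from the paper: the class `AdaptiveContentStore`, the sliding-window size, the admission threshold, and the eviction rule (replace the least-requested cached item only when the newcomer is requested more often) are all hypothetical choices.

```python
from collections import Counter, deque
from typing import Callable

# Hypothetical sketch of popularity-aware caching at one NDN router;
# window size, threshold, and eviction rule are assumptions, not the paper's method.


class AdaptiveContentStore:
    def __init__(self, capacity: int, window: int, admit_threshold: int) -> None:
        self.capacity = capacity                # max number of Data packets kept
        self.admit_threshold = admit_threshold  # min recent requests before caching
        self.recent: deque = deque(maxlen=window)  # sliding window of requested names
        self.counts: Counter = Counter()        # per-name request counts in the window
        self.store: dict[str, str] = {}         # cached name -> Data payload

    def _record(self, name: str) -> None:
        # When the window is full, the oldest request is about to drop out: forget it.
        if len(self.recent) == self.recent.maxlen:
            oldest = self.recent[0]
            self.counts[oldest] -= 1
            if self.counts[oldest] == 0:
                del self.counts[oldest]
        self.recent.append(name)  # deque(maxlen=...) discards the oldest entry itself
        self.counts[name] += 1

    def on_interest(self, name: str, fetch_upstream: Callable[[str], str]) -> tuple[str, bool]:
        """Serve an Interest; return (data, cache_hit)."""
        self._record(name)
        if name in self.store:
            return self.store[name], True
        data = fetch_upstream(name)  # stand-in for forwarding toward the producer
        self._maybe_admit(name, data)
        return data, False

    def _maybe_admit(self, name: str, data: str) -> None:
        popularity = self.counts[name]
        if popularity < self.admit_threshold:
            return  # not requested often enough (yet) to deserve a slot
        if len(self.store) < self.capacity:
            self.store[name] = data
            return
        # Cache full: evict the least-requested entry only if the newcomer is more popular.
        victim = min(self.store, key=lambda n: self.counts[n])
        if self.counts[victim] < popularity:
            del self.store[victim]
            self.store[name] = data


cs = AdaptiveContentStore(capacity=2, window=10, admit_threshold=2)
upstream = lambda name: f"data for {name}"
requests = ["/video/a", "/video/b", "/video/a", "/video/a", "/news/c", "/video/a"]
hits = [cs.on_interest(name, upstream)[1] for name in requests]
print(hits)  # [False, False, False, True, False, True]
```

Here `/video/a` is cached only after its second request, so later requests are served locally, while one-off requests such as `/news/c` never take up a cache slot. A real NDN forwarder would also deal with the Pending Interest Table, data freshness, and forwarding strategy, which this sketch leaves out.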

Layperson’s Explanation:

The Internet today focuses on where data comes from (IP addresses), but modern users care about what the data is. Applications such as video streaming, online education, AI, and IoT need data instantly, regardless of its source. NDN reimagines the Internet by focusing on the data itself. The proposed caching strategy lets routers learn which content is requested most often and keep copies of it closer to users. This means faster responses, smoother access, and less congestion, even under heavy network load.

Practical Implementation and Social Implications:

Traditional TCP/IP struggles with scalability, congestion, and security. In contrast, NDN is a future Internet architecture designed for delay-sensitive and data-driven environments. This matters because:

- In AI and IoT, even a small delay can disrupt real-time processing.

- In VANETs (Vehicular Ad hoc Networks), delays of milliseconds can pose serious danger for safety-critical applications.

- In tactical and mission-critical communications, reliability and speed can determine mission success.

The caching innovation strengthens NDN’s role in delivering secure, delay-sensitive, and efficient communication for these emerging domains, while also reducing congestion and making networks more sustainable.

Collaborations:

This research is a collaboration between the Department of Computer Science and Engineering, SRM University-AP, Amaravati, and the Department of Computer Science and Engineering, Indian Institute of Technology (ISM) Dhanbad, involving Dr Nikhil Tripathi (IIT Dhanbad).

Future Research Plans:

Future directions include extending adaptive caching to vehicular networks (VANETs), tactical communications, and other mission-critical systems, with a focus on AI-driven predictive caching for real-time applications. The goal is to enable networks to anticipate demand, ensure secure data delivery, and minimize delays, which

Photographs related to the research:

- Transforming IoT Performance: Deep Learning in the Fog–Cloud Continuum September 24, 2025

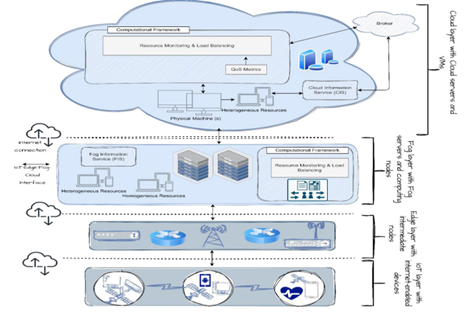

In the paper titled “Deep Learning-centric Task Offloading in IoT-Fog-Cloud Continuum: A State-of-the-Art Review, Open Research Issues and Future Directions,” Dr Kshira Sagar Sahoo, Assistant Professor, Department of Computer Science and Engineering, and collaborators comprehensively review the role of machine learning and deep learning in optimising IoT systems. Published in IEEE Access (2025), the study examines how intelligent task offloading and resource allocation across the fog–cloud continuum can address challenges of latency, bandwidth, and privacy. The article highlights key open issues and future directions, offering insights for developing faster, more reliable, and more secure IoT applications in domains such as healthcare, smart cities, autonomous vehicles, and industrial IoT.

Abstract

The rapid growth of IoT and real-time applications has created massive volumes of data, which are traditionally processed in cloud-centric systems. This approach often suffers from high latency, bandwidth limitations, and privacy risks. Fog computing, by bringing computation closer to IoT devices, offers a promising solution. Our study provides a comprehensive review of task offloading and resource allocation in the fog–cloud continuum, with a focus on machine learning and deep learning–based approaches.

Explanation in Layperson’s Terms

With billions of smart devices (such as wearables, sensors, and cameras) generating data every second, sending everything to the cloud for processing can cause delays and strain the internet. Imagine if your smartwatch had to send your heartbeat data across the globe before alerting you to a health issue; that delay could be dangerous. Our research examines how to use the fog–cloud continuum, where nearby devices such as routers or gateways help with computation instead of sending everything to the cloud.

Practical Implementation

Findings from this survey can help design smarter IoT systems where tasks are offloaded efficiently to nearby fog or edge devices, reducing latency and improving reliability. Key applications include:

- Healthcare monitoring (real-time alerts)

- Smart cities (traffic management, surveillance)

- Autonomous vehicles (low-latency decision-making)

- Industrial IoT (automation, predictive maintenance)
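As a rough illustration of what an offloading decision involves, the sketch below picks the tier (device, fog, or cloud) with the lowest estimated completion time, modelled as upload time plus round-trip time plus computation time. This is a simple rule-based baseline of our own, not one of the machine learning or deep learning methods surveyed in the paper, and the `Tier` and `Task` classes, the `choose_tier` helper, and all numbers are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical rule-based baseline, not one of the ML/DL methods the review surveys.
# All tier speeds, bandwidths, and task sizes below are made-up illustrative values.


@dataclass
class Tier:
    name: str
    cpu_hz: float      # cycles per second available to the task on this tier
    uplink_bps: float  # device-to-tier bandwidth in bits/s (0 means run locally)
    rtt_s: float       # network round-trip time to this tier in seconds


@dataclass
class Task:
    input_bits: float  # data that must be uploaded if the task is offloaded
    cpu_cycles: float  # computation the task needs


def estimated_latency(task: Task, tier: Tier) -> float:
    """Upload time + round-trip time + compute time (result size ignored)."""
    upload = task.input_bits / tier.uplink_bps if tier.uplink_bps > 0 else 0.0
    return upload + tier.rtt_s + task.cpu_cycles / tier.cpu_hz


def choose_tier(task: Task, tiers: list[Tier]) -> tuple[str, float]:
    """Greedy choice: the tier with the lowest estimated completion time."""
    best = min(tiers, key=lambda tier: estimated_latency(task, tier))
    return best.name, estimated_latency(task, best)


tiers = [
    Tier("device", cpu_hz=1e9, uplink_bps=0, rtt_s=0.0),
    Tier("fog", cpu_hz=1e10, uplink_bps=1e8, rtt_s=0.005),
    Tier("cloud", cpu_hz=1e11, uplink_bps=1e7, rtt_s=0.15),
]
workload = {
    "sensor reading check": Task(input_bits=8e3, cpu_cycles=1e6),
    "heartbeat anomaly scan": Task(input_bits=8e5, cpu_cycles=2e9),
    "video analytics batch": Task(input_bits=8e5, cpu_cycles=1e12),
}
for label, task in workload.items():
    where, latency = choose_tier(task, tiers)
    print(f"{label}: {where} ({latency:.3f} s)")
```

With these assumed figures, a tiny task stays on the device, a moderate one goes to the nearby fog node, and a compute-heavy job is worth the longer trip to the cloud. Learning-based approaches of the kind the review covers aim to make such choices when bandwidth, load, and task sizes are uncertain or changing.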

Social Impact

- Faster decision-making: Improves user safety and experience by cutting delays.

- Cost efficiency: Reduces operational costs by lowering dependence on cloud-only processing.

- Data privacy: Sensitive data can be processed closer to the source, enhancing security.

Collaborations

This research is a joint collaboration between:

- University of Saskatchewan, Saskatoon, SK, Canada

- GITAM Deemed to be University, Visakhapatnam, India

- SRM University-AP

Future Research Plans

- Developing lightweight, explainable AI models for task offloading on constrained IoT devices.

- Extending research into cybersecurity in IoT–Fog–Cloud systems, particularly DDoS detection and mitigation.
