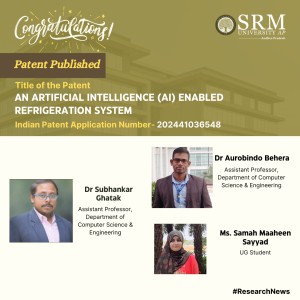

CSE Academic Innovators File Patent for AI-Powered Refrigeration System

In a significant development for the field of artificial intelligence and sustainable technologies, Dr Subhankar Ghatak and Dr Aurobindo Behera, Assistant Professors, and Ms Samah Maaheen Sayyad, an undergraduate student, all from the Department of Computer Science and Engineering, have jointly filed a patent for an “Artificial Intelligence (AI) Enabled Refrigeration System.” The patent, bearing Application Number 202441036548, has been officially published in the Patent Office Journal, marking a milestone in their academic and research careers.

This innovative refrigeration system promises to enhance efficiency and reduce energy consumption, potentially revolutionising the way we preserve food and other perishables. The team’s dedication to integrating AI into practical applications is a testament to their commitment to advancing technology for the betterment of society. The academic community and industry experts alike are eagerly anticipating further details on the implementation and impact of this patented technology.

Abstract

The invention is an advanced smart and AI-enabled refrigerator that seamlessly integrates device and software components. Key features include automatic quantity detection, a reminder system, a spoiler alert system, an inbuilt voice system, an inbuilt barcode scanner, an emotion detection system, and a personalised recipe recommendation system based on user preferences, weather conditions, season, location, and precise quantity measurements.

Research in Layperson’s Terms

The invention represents a groundbreaking improvement in traditional refrigerators, providing a new and enriched user experience through AI integration. It addresses food management, user interaction, and personalised recipe recommendations, incorporating user preferences, weather considerations, seasonal variations, location-specific nuances, and accurate quantity measurements.

Practical implementation and the social implications associated with it

The practical implementation of the “AN ARTIFICIAL INTELLIGENCE (AI) ENABLED REFRIGERATION SYSTEM” involves the seamless integration of advanced hardware and sophisticated AI algorithms to create an intelligent and user-friendly refrigerator. The following steps outline the practical implementation:

Hardware Integration:

Sensors: Install advanced sensors, including thermistors for temperature, humidity sensors, barcode scanners, ultrasonic quantity measurement sensors, cameras, spoilage identification sensors, level sensors, defrost sensors, and weight sensors within the refrigerator compartments.

Voice and Emotion Detection Modules: Incorporate a microphone and speaker system for voice interaction and integrate cameras and emotion analysis algorithms for facial recognition and emotion detection.

Connectivity Components: Equip the refrigerator with Wi-Fi or Bluetooth modules to enable seamless data transfer and communication with other smart devices.

Processor and Memory: Utilize a powerful processor and ample memory to support AI algorithms, data processing, and smooth operation.

Display Panel: Implement an LED or touchscreen display for user interaction, providing real-time information and control over the refrigerator’s functionalities.

Software Development:

AI Algorithms: Develop and integrate AI algorithms for automatic quantity detection using computer vision, sentiment analysis for emotion detection, and collaborative filtering for personalised recipe recommendations.

Natural Language Processing (NLP): Implement NLP algorithms to enable the inbuilt voice system to understand and respond to user commands effectively.

Image Recognition Software: Utilize image recognition software to accurately read barcodes and analyse visual data from the integrated cameras.

Connectivity Software: Develop software protocols to ensure reliable wireless communication between the refrigerator and other devices or cloud services.

User Interface Software: Design a user-friendly interface for the display panel, allowing users to interact with and manage refrigerator contents easily.
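As an illustration of the collaborative filtering mentioned above for recipe recommendations, here is a minimal user-based sketch. It is not the patented method: the rating data, the user names, and the helper names `cosine_similarity` and `recommend` are hypothetical, and a real system would also factor in weather, season, location, and stock levels.

```python
from math import sqrt

def cosine_similarity(a, b):
    """Cosine similarity between two rating dicts, over the recipes both users rated."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[r] * b[r] for r in shared)
    norm_a = sqrt(sum(a[r] ** 2 for r in shared))
    norm_b = sqrt(sum(b[r] ** 2 for r in shared))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(ratings, user, top_n=3):
    """Rank recipes the user has not rated by similarity-weighted ratings of other users."""
    scores, weights = {}, {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], other_ratings)
        if sim <= 0:
            continue  # ignore users with no overlap in taste
        for recipe, rating in other_ratings.items():
            if recipe in ratings[user]:
                continue  # only suggest recipes the user has not rated yet
            scores[recipe] = scores.get(recipe, 0.0) + sim * rating
            weights[recipe] = weights.get(recipe, 0.0) + sim
    predicted = {r: scores[r] / weights[r] for r in scores}
    # Highest predicted rating first; ties broken alphabetically.
    return sorted(predicted, key=lambda r: (-predicted[r], r))[:top_n]

# Hypothetical ratings (1 to 5), for illustration only.
ratings = {
    "asha": {"dal": 5, "salad": 2, "soup": 4},
    "ravi": {"dal": 5, "salad": 1, "soup": 5, "khichdi": 5, "smoothie": 2},
    "meera": {"dal": 1, "salad": 5, "smoothie": 5, "khichdi": 2},
}
print(recommend(ratings, "asha"))
```

Because "asha" rates recipes much like "ravi", ravi's favourites are weighted more heavily than meera's when ranking the suggestions.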

Social Implications:

The “AI Enabled Refrigeration System” invention has several profound social implications:

1. Reduction in Food Wastage: The automatic quantity detection, reminder system, and spoilage alert system significantly reduce food wastage by ensuring that users are alerted about unused items and potential spoilage. This contributes to more efficient food management and a reduction in household food waste, addressing a critical global issue.

2. Enhanced Food Safety and Health: By providing real-time alerts about food spoilage and precise quantity measurements, the invention ensures that users consume fresh and safe food. This minimizes health risks associated with consuming spoiled food and promotes overall well-being.

3. Personalized Dietary Support: The personalized recipe recommendation system caters to individual dietary preferences and requirements, promoting healthier eating habits. By suggesting recipes based on user preferences, weather conditions, seasonality, and location, the system encourages balanced and nutritious meal planning.

4. Convenience and Efficiency: The inbuilt voice system, emotion detection, and intuitive user interface enhance the convenience and efficiency of managing refrigerator contents. Users can easily access information, receive reminders, and interact with the refrigerator, making food storage and preparation more streamlined.

5. Technological Advancements: The integration of advanced AI technologies in everyday appliances like refrigerators represents a significant step forward in smart home innovation. This can drive further advancements in the field, encouraging the development of more intelligent and interconnected household devices.

6. Environmental Impact: By promoting efficient food management and reducing wastage, the invention indirectly contributes to environmental sustainability. Less food waste translates to lower carbon footprints and reduced strain on food production resources, aligning with global efforts to combat climate change.

Overall, the “AI Enabled Refrigeration System” invention not only offers practical benefits in terms of food management and user convenience but also holds significant social implications by promoting health, reducing waste, and advancing technological innovation in household appliances.

Future Research Plans

Building on the innovative foundation of the “AN ARTIFICIAL INTELLIGENCE (AI) ENABLED REFRIGERATION SYSTEM,” future research plans involve enhancing the AI algorithms for even greater accuracy in food quantity detection, spoilage prediction and personalised recipe recommendations. This includes exploring more advanced machine learning techniques and incorporating real-time feedback mechanisms to continuously refine the system’s performance. Additionally, research will focus on integrating the refrigerator with broader smart home ecosystems, allowing for seamless interaction with other smart appliances and IoT devices to create a fully connected kitchen experience. Investigations into more sustainable and energy-efficient sensor technologies will also be pursued to further reduce the environmental footprint of the device. Finally, extensive user studies will be conducted to gather feedback and insights, ensuring that the next iterations of the refrigerator are even more aligned with consumer needs and preferences, ultimately driving widespread adoption and maximising the social benefits of this technology.

Pictures Related to the Research

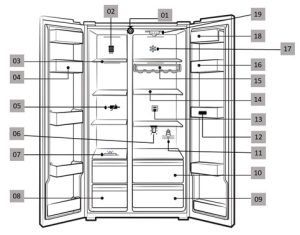

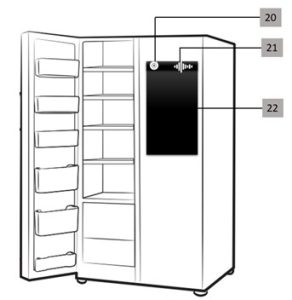

Fig 1: Schematic Arrangement of various Components for adequate operation of the proposed scheme

Fig 2: Schematic Arrangement of various Components for user interaction

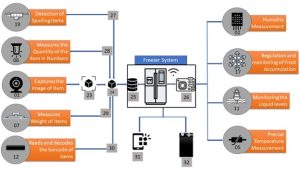

Fig 3: Schematic representation of working of various components in the freezer system

• Pointer Number-27: The Spoilage Detection Sensor (19) detects an item that is spoiling and, with the help of the capturing device (01), maps it to the specific item and its associated information (such as the expiry date) to alert the user.

• Pointer Number-28: The Ultrasonic Quantity Measurement Sensor (06) senses the quantity of ITEM “x” (24), and the camera (01) identifies what ITEM “x” is through (23).

• Pointer Number-29: When the capturing device (01) registers a newly added ITEM “x” (24), the Weight Sensor (07) determines the item’s weight as the difference between the cabinet’s weight after the item is added and its initial weight, and attaches that value to the corresponding ITEM “x” (24).

• Pointer Number-30: The Barcode Scanner (12) scans the barcode associated with the item and, with the help of the capturing device (01), maps the corresponding information to that particular item.

• Pointer Number-31: Mobile Application.
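The weight-by-difference step described for the Weight Sensor (07) can be sketched as follows. This is only an illustration under stated assumptions: the function names, the `inventory` dictionary, and the gram readings are hypothetical and are not taken from the patent.

```python
def item_weight(weight_before_g, weight_after_g):
    """Weight of a newly added item: cabinet reading after adding minus reading before."""
    delta = weight_after_g - weight_before_g
    if delta <= 0:
        raise ValueError("no weight increase detected; was an item actually added?")
    return delta

# Hypothetical in-memory store mapping an item label to its total weight in grams.
inventory = {}

def register_item(label, weight_before_g, weight_after_g):
    """Attach the measured weight to the item identified by the capturing device."""
    inventory[label] = inventory.get(label, 0) + item_weight(weight_before_g, weight_after_g)
    return inventory[label]

# Example: the cabinet held 1200 g, and reads 2230 g after a carton of milk is added.
print(register_item("milk", 1200, 2230))
```

Readings are kept as whole grams here so that the subtraction is exact; a real sensor would also need filtering for noise and drift.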

- Published in CSE NEWS, Departmental News, News, Research News

A System for Visually Impaired Navigation

A dedicated team of faculty members and students has developed an invention, described in the patent titled “System and a Method for Assisting Visually Impaired Individuals”, that uses cutting-edge technology to significantly improve the navigation experience for visually impaired individuals, fostering greater independence and safety.

The team, comprising Dr Subhankar Ghatak and Dr Aurobindo Behera, Assistant Professors from the Department of Computer Science and Engineering, and students Ms Samah Maaheen Sayyad, Mr Chinneboena Venkat Tharun, and Ms Rishitha Chowdary Gunnam, has designed a system that transforms real-time visual data into vocal cues delivered via a mobile app. It utilises wearable cameras, cloud processing, computer vision, and deep learning algorithms. Their solution captures visual information and processes it on the cloud, delivering relevant auditory prompts to users.

Abstract

This patent proposes a novel solution entitled “System and a method for assisting visually impaired individuals”, aimed at easing navigation for visually impaired individuals. It integrates cloud technology, computer vision algorithms, and Deep Learning Algorithms to convert real-time visual data into vocal cues delivered through a mobile app. The system employs wearable cameras to capture visual information, processes it on the cloud, and delivers relevant auditory prompts to aid navigation, enhancing spatial awareness and safety for visually impaired users.

Practical implementation/Social implications of the research

The practical implementation of our research involves several key components. Firstly, we need to develop or optimise wearable camera devices that are comfortable and subtle for visually impaired individuals to wear. These cameras should be capable of capturing high-quality real-time visual data. Secondly, we require a robust cloud infrastructure capable of processing this data quickly and efficiently using advanced computer vision algorithms and Deep Learning Algorithms. Lastly, we need to design and develop a user-friendly mobile application that delivers the processed visual information as vocal cues in real-time. This application should be intuitive, customisable, and accessible to visually impaired users.
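To make the last step of this pipeline concrete, the sketch below turns object detections into short phrases that a text-to-speech engine could read aloud. It is an assumption-laden illustration, not the team's implementation: the detection format (`label`, `x_centre`, `box_width`), the three-way left/ahead/right split, and the use of box width as a rough proxy for proximity are all hypothetical.

```python
def describe(detections, frame_width):
    """Turn detections into short cue phrases, largest bounding box first.

    Assumption: a wider box roughly means a closer object, so it is announced first.
    """
    cues = []
    for det in sorted(detections, key=lambda d: -d["box_width"]):
        centre = det["x_centre"] / frame_width  # 0.0 = far left, 1.0 = far right
        if centre < 1 / 3:
            side = "on your left"
        elif centre > 2 / 3:
            side = "on your right"
        else:
            side = "ahead"
        cues.append(f"{det['label']} {side}")
    return cues

# Hypothetical detections for one 640-pixel-wide camera frame.
frame = [
    {"label": "crosswalk", "x_centre": 320, "box_width": 400},
    {"label": "traffic signal", "x_centre": 560, "box_width": 60},
    {"label": "obstacle", "x_centre": 80, "box_width": 150},
]
for cue in describe(frame, frame_width=640):
    print(cue)
```

In a deployed system the detections would come from the cloud-hosted vision models and the phrases would be passed to the mobile app's speech output.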

The social implications of implementing this research are significant. By providing visually impaired individuals with a reliable and efficient navigation aid, we can greatly enhance their independence and quality of life. Navigating city environments can be challenging and hazardous for the visually impaired, leading to increased dependency and reduced mobility. Our solution aims to mitigate these challenges by empowering users to navigate confidently and autonomously. This fosters a more inclusive society where individuals with visual impairments can participate actively in urban mobility, employment, and social activities.

In the future, we plan to further enhance and refine our technology to better serve the needs of visually impaired individuals. This includes improving the accuracy and reliability of object recognition and scene understanding algorithms to provide more detailed and contextually relevant vocal cues. Additionally, we aim to explore novel sensor technologies and integration methods to expand the capabilities of our system, such as incorporating haptic feedback for enhanced spatial awareness.

Furthermore, we intend to conduct extensive user testing and feedback sessions to iteratively improve the usability and effectiveness of our solution. This user-centric approach will ensure that our technology meets the diverse needs and preferences of visually impaired users in various real-world scenarios.

Moreover, we are committed to collaborating with stakeholders, including advocacy groups, healthcare professionals, and technology companies, to promote the adoption and dissemination of our technology on a larger scale. By fostering partnerships and engaging with the community, we can maximize the positive impact of our research on the lives of visually impaired individuals worldwide.

- Published in CSE NEWS, Departmental News, News, Research News

Navigating the Future: Advancements in NLP for Enhanced Healthcare

In a significant contribution to the intersection of technology and healthcare, Dr V M Manikandan, Assistant Professor in the Department of Computer Science and Engineering, along with a team of dedicated undergraduate students, has co-authored a pivotal book chapter. The chapter, titled “Advancements and Challenges of Using Natural Language Processing in the Healthcare Sector,” has been published in the book “Digital Transformation in Healthcare 5.0.”

The collaborative effort by Dr Manikandan, Mr Shasank Kamineni, Ms Meghana Tummala, Ms Sai Yasheswini Kandimalla, and Mr Tejodbhav Koduru delves into the innovative applications and potential hurdles of implementing natural language processing (NLP) technologies in healthcare. Their work highlights the transformative power of NLP in analysing vast amounts of unstructured clinical data, thereby enhancing patient care and medical research.

This academic achievement showcases the expertise and commitment of the faculty and students and underscores the institution’s role in driving forward the digital revolution in healthcare. The chapter is expected to serve as a valuable resource for researchers, practitioners, and policymakers interested in developing smarter, more efficient healthcare systems.

Introduction of the Book Chapter

“Digital Transformation in Healthcare 5.0: IoT, AI, and Digital Twin” delves into how advanced technologies like IoT, AI, and digital twins are reshaping healthcare. It provides a comprehensive look at the integration challenges and technological advancements aiming to modernise medical practices. The chapter “Advancements and Challenges of Using Natural Language Processing in the Healthcare Sector” specifically explores how NLP processes vast data in healthcare to transform it into actionable insights, enhancing efficiency and patient care while highlighting the implementation challenges of these technologies. This book is crucial for healthcare and technology professionals interested in the future of digitally enhanced healthcare.

Significance of the Book Chapter

The chapter “Advancements and Challenges of Using Natural Language Processing in the Healthcare Sector” is significant because it encapsulates my interest and expertise in harnessing NLP to enhance healthcare operations. It showcases the potential of technology in transforming healthcare data into valuable, actionable insights, directly aligning with my focus on improving patient outcomes through technological innovation.

- Published in Computer Science News, CSE NEWS, Departmental News, News, Research News

Exploring the Exciting Potential of 6G Networking

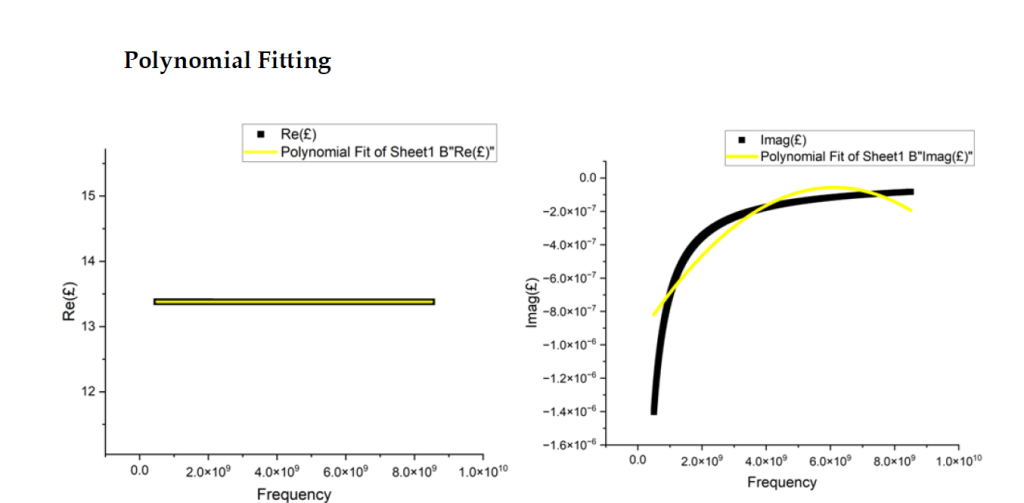

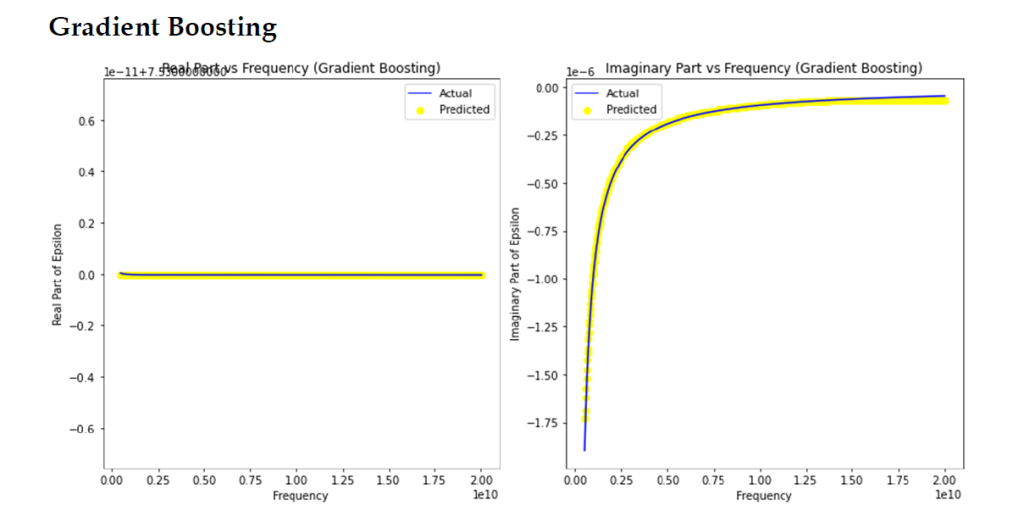

The Department of Computer Science and Engineering is proud to announce the acceptance of the book chapter titled “Dielectric Characterization of Ovine Heart Tissues at Terahertz Frequencies via Machine Learning: A Use Case for in-vivo Wireless Nano-Communication” in the book “Edge-Enabled 6G Networking: Foundations, Technologies, and Applications.” The chapter, by Dr Manjula R and her students Ms NSK Sarayu, Ms N Sai Sruthi, Ms D Samaya, and Mr K Tarun Teja from the department, caters to UG, PG, and PhD students, educational institutions, and the medical and healthcare sectors. Dr Manjula’s research not only underscores the significance of understanding the dielectric properties of heart tissues but also highlights the transformative potential of machine learning in prediction, diagnosis, and therapeutic interventions equipped with real-time monitoring capabilities. The research also lays the groundwork for future advancements in this field, facilitating the development of more efficient and reliable in-vivo sensing technologies.

Abstract of the Book Chapter:

A new generation of sensing, processing, and communicating devices at the size of a few cubic micrometers is made possible by nanotechnology. Such tiny devices will transform healthcare applications and open up new possibilities for in-body settings. A thorough understanding of the in-vivo channel characteristics is essential to achieve efficient communication between the nanonodes floating in the circulatory system (here, the heart) and the gateway devices fixed in the skin. This requires accurate knowledge of the dielectric properties (permittivity and conductivity) of cardiac tissues in the terahertz band (0.1 to 10 THz). This research examines how accurately machine learning models can calculate the dielectric properties of cardiac tissues. Initially, we generate the data using a 3-pole Debye model and then use machine learning models (Linear Regression, Polynomial Regression, Gradient Boosting, and KNN) on this data to estimate the dielectric properties. We compare the values predicted by the machine learning models with those given by the analytical model. Our investigation shows that the Gradient Boosting method has the best prediction performance among them. Further, we have also validated these results using Origin software, employing a curve-fitting technique. In addition, the research contributes to the study of data expansion by predicting unknown data based on available experimental data, emphasizing the broader applicability of machine learning in biomedical research. The study’s conclusions enhance areas like non-invasive sensing in the context of 6G, which may improve data and monitoring in a networked healthcare environment.
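For readers unfamiliar with the data-generation step, the sketch below evaluates a generic multi-pole Debye model, ε(ω) = ε∞ + Σ Δε_j / (1 + jωτ_j), and derives conductivity as σ = ω ε0 ε″. The chapter's exact formulation and fitted parameters are not given here, so the values in `POLES` and `EPS_INF` are placeholders chosen only to make the example run; they are not measured tissue parameters.

```python
import math

EPS0 = 8.8541878128e-12  # vacuum permittivity in F/m

def debye_permittivity(freq_hz, eps_inf, poles):
    """Complex relative permittivity from a multi-pole Debye model.

    poles is a list of (delta_eps, tau_seconds) pairs, one per relaxation pole.
    """
    omega = 2 * math.pi * freq_hz
    eps = complex(eps_inf, 0.0)
    for delta_eps, tau in poles:
        eps += delta_eps / (1 + 1j * omega * tau)
    return eps

def conductivity(freq_hz, eps):
    """Conductivity in S/m from the loss part: sigma = omega * eps0 * eps''."""
    omega = 2 * math.pi * freq_hz
    return omega * EPS0 * (-eps.imag)  # eps = eps' - j*eps'', so eps'' = -imag

# Placeholder 3-pole parameters (hypothetical, NOT fitted to any tissue data).
EPS_INF = 2.5
POLES = [(50.0, 8e-12), (10.0, 1e-13), (2.0, 1e-14)]

# Sweep part of the terahertz band (0.1 to 10 THz) to build a small data set.
for f_thz in (0.1, 1.0, 10.0):
    eps = debye_permittivity(f_thz * 1e12, EPS_INF, POLES)
    sigma = conductivity(f_thz * 1e12, eps)
    print(f"{f_thz:5.1f} THz  eps'={eps.real:.3f}  eps''={-eps.imag:.3f}  sigma={sigma:.2f} S/m")
```

Pairs of frequency and permittivity generated this way could then serve as training data for regression models such as those named in the abstract.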

- Published in CSE NEWS, Departmental News, News, Research News

An Inventive Navigation System for the Visually Impaired

The Department of Computer Science and Engineering is proud to announce that the patent titled “A System and a Method for Assisting Visually Impaired Individuals” has been published by Dr Subhankar Ghatak and Dr Aurobindo Behera, Asst Professors, along with UG students Ms Samah Maaheen Sayyad, Mr Chinneboena Venkat Tharun, and Ms Rishitha Chowdary Gunnam. Their patent introduces a smart solution to help visually impaired people navigate busy streets more safely. The system uses cloud technology to turn visual information captured by wearable cameras into helpful vocal instructions that users can hear through their mobile phones. These instructions describe things like traffic signals, crosswalks, and obstacles, making it easier for users to move around independently and paving the way for a more inclusive society.

Abstract

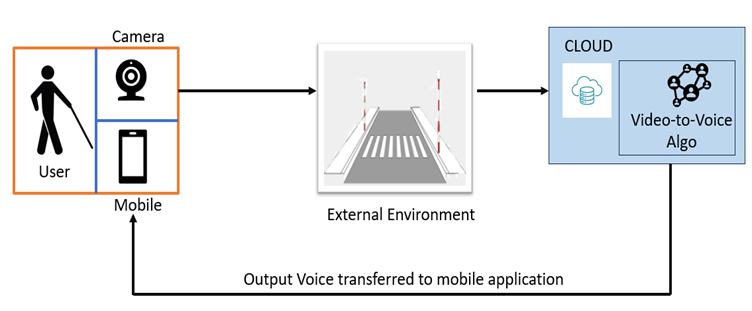

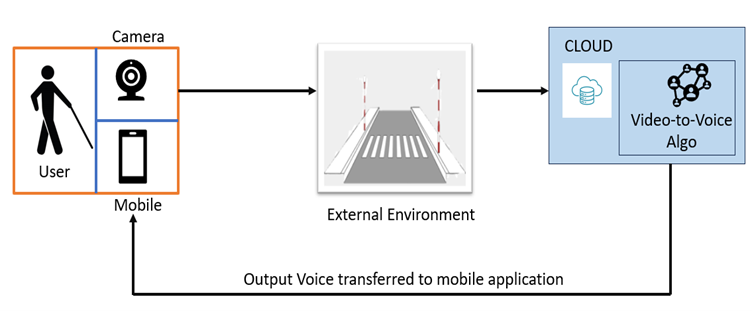

This patent proposes a novel solution to ease navigation for visually impaired individuals. It integrates cloud technology, computer vision algorithms, and Deep Learning Algorithms to convert real-time visual data into vocal cues delivered through a mobile app. The system employs wearable cameras to capture visual information, processes it on the cloud, and delivers relevant auditory prompts to aid navigation, enhancing spatial awareness and safety for visually impaired users.

Practical implementation/Social implications of the research

The practical implementation of the research involves several key components.

- Developing or optimising wearable camera devices that are comfortable and subtle for visually impaired individuals to wear. These cameras should be capable of capturing high-quality real-time visual data.

- Building a robust cloud infrastructure that processes this data quickly and efficiently using advanced computer vision and deep learning algorithms.

- Designing and developing a user-friendly mobile application that delivers processed visual information as vocal cues in real time. This application should be intuitive, customisable, and accessible to visually impaired users.

Fig.1: Schematic representation of the proposal

The social implications of implementing this research are significant. Providing visually impaired individuals with a reliable and efficient navigation aid can greatly enhance their independence and quality of life. Navigating city environments can be challenging and hazardous for the visually impaired, leading to increased dependency and reduced mobility. The research aims to mitigate these challenges by empowering users to navigate confidently and autonomously. This fosters a more inclusive society where individuals with visual impairments can participate actively in urban mobility, employment, and social activities.

In the future, the research cohort plans to further enhance and refine the technology to better serve the needs of visually impaired individuals. This includes improving the accuracy and reliability of object recognition and scene understanding algorithms to provide more detailed and contextually relevant vocal cues. Additionally, they aim to explore novel sensor technologies and integration methods to expand the capabilities of the system, such as incorporating haptic feedback for enhanced spatial awareness. Furthermore, they intend to conduct extensive user testing and feedback sessions to iteratively improve the usability and effectiveness of the solution. This user-centric approach will ensure that the technology meets the diverse needs and preferences of visually impaired users in various real-world scenarios.

Moreover, the team is committed to collaborating with stakeholders, including advocacy groups, healthcare professionals, and technology companies, to promote the adoption and dissemination of this technology on a larger scale. By fostering partnerships and engaging with the community, they can maximise the positive impact of their research on the lives of visually impaired individuals worldwide.

- Published in CSE NEWS, Departmental News, News, Research News

Dr Manjula R and Students Publish Book Chapter on Machine Learning in 6G Networks

In an exciting development, Dr Manjula R, Assistant Professor in the Department of Computer Science and Engineering, along with B.Tech. students Mr Adi Vishnu Avula, Mr Jawad Khan, Mr Chiranjeevi Thota, and Ms Venkata Kavyanjali Munipalle, has authored a book chapter titled “Machine Learning Approach to Determine and Predict the Scattering Coefficients of Myocardium Tissue in the NIR Band for In-Vivo Communications – 6G Network” in the book “Edge-Enabled 6G Networking: Foundations, Technologies, and Applications”.

This achievement highlights innovative research and collaboration and showcases the dedication and expertise of both faculty and students in the field of computer science and engineering. The book chapter explores cutting-edge advancements in 6G networking and its potential applications, shedding light on the future of communication technologies.

We congratulate Dr Manjula R and the team of talented students on this significant accomplishment and look forward to seeing more groundbreaking research from them in the future. Stay tuned for more updates on their work and achievements.

Abstract

The accurate calculation of the scattering coefficient of biological tissues (myocardium) is critical for estimating the path losses in prospective 6G in-vivo Wireless Nanosensor Networks (i-WNSN). This research explores machine learning’s potential to promote non-invasive procedures and improve the accuracy of in-vivo diagnostic systems while determining the myocardium’s scattering properties in the Near Infrared (NIR) band. We begin by presenting the theoretical model used to estimate and calculate scattering coefficients in the NIR region of the EM spectrum. We then provide numerical simulation results using the scattering coefficient model, followed by machine learning models such as Linear Regression, Polynomial Regression, Gradient Boost and ANN (Artificial Neural Network) to estimate the scattering coefficients in the wavelength range 600-900 nm.

We next contrast the values provided by the analytical model with those predicted by the machine learning models. In addition, we investigate the potential of machine learning models to produce new data sets, using data expansion techniques to forecast the scattering coefficient values of the unavailable data sets. Our inference is that machine learning models are able to estimate the scattering coefficients with very high accuracy, with gradient boosting performing better than the other three models. However, when it comes to predicting the extrapolated data, ANN performs better than the other three models.

Keywords: 6G, In-vivo, Dielectric Constant, Nano Networks, Scattering Coefficient, Machine Learning.

Significance of Book Chapter

The human heart is a vital organ of the cardiovascular system. However, it is prone to several diseases, collectively known as cardiovascular disease (CVD). CVDs are a set of heart diseases that includes heart attack, cardiac arrest, arrhythmias, cardiomyopathy, and atherosclerosis, to name a few. CVD alone accounts for most deaths across the globe, and the number of deaths due to CVD is estimated to reach 23.3 million by 2030. Early detection and diagnosis of CVD is key to reducing these death rates. Current diagnostic tests include, among others, ECG, blood tests, cardiac X-ray, and angiogram.

The limitations of these techniques include the bulkiness of the equipment, the cost, and the fact that tests are suggested only when the condition has reached a critical stage. To alleviate these issues, on-body or wearable devices such as smart watches now collect timely information about cardiac health parameters and notify the user in real time. These devices can track certain health parameters such as heart rate, blood pressure, and activity levels, and any deviation of the measured values from normal values might indicate cardiac health issues. However, smart watches cannot directly detect the presence of plaque in the arteries that leads to atherosclerosis. Confirming a problem therefore still requires a formal diagnostic test such as cardiac catheterization or cardiac X-ray, which leads back to the original problem.

Therefore, in this work we aim to mitigate these issues by proposing the use of prospective medical-grade nanorobots, called nanosurgeons, that can provide real-time information on the health condition of the internal body. In particular, our work assumes that these tiny nanobots are injected into the cardiovascular system and keep circulating with the blood to gather health information. Such nanosized robots are typically expected to work in the terahertz band owing to their size. At such high frequencies, the signals are prone to high path losses due to spreading, absorption, and scattering during propagation. Our work aims at understanding these losses, especially the scattering losses, of the terahertz signal in the NIR band (600-900 nm) analytically, using existing models. Further, to understand the strength of machine learning in predicting these scattering losses, we also carry out simulation work to estimate and predict the scattering losses using Linear Regression, Polynomial Regression, Gradient Boost, and Artificial Neural Network (ANN) models.

Our preliminary investigation suggests that scattering losses are minimal in the NIR band and that machine learning is a potential candidate for predicting scattering losses, both from the available experimental data and, using data augmentation techniques, at frequencies for which experimental data is not available. This can also avoid the use of costly equipment to determine these parameters.

- Published in Computer Science News, CSE NEWS, Departmental News, News, Research News

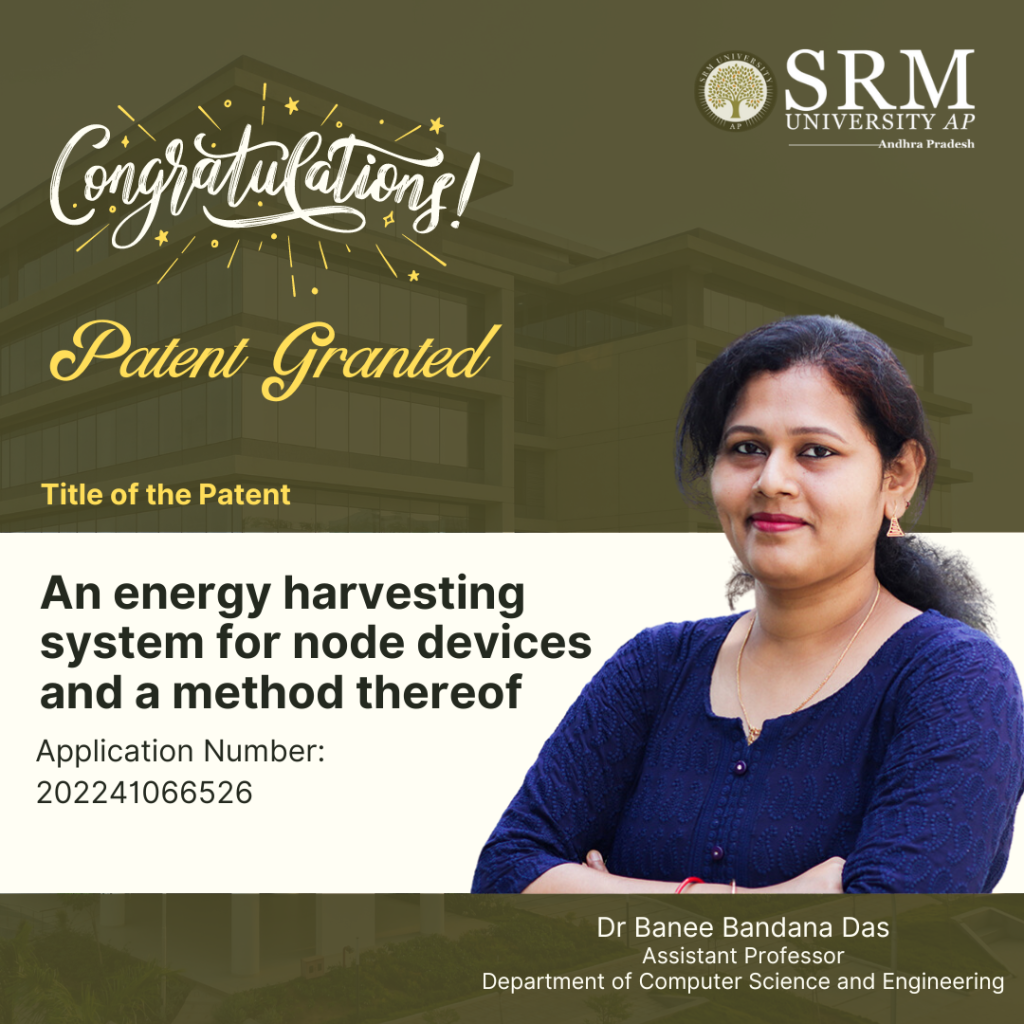

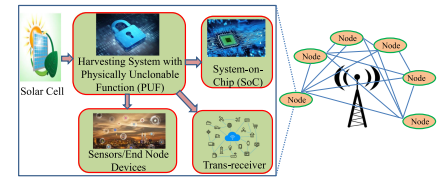

Revolutionising Energy Harvesting: Dr Banee Bandana Das Receives Patent for Innovative System

Dr Banee Bandana Das, Assistant Professor in the Department of Computer Science and Engineering, has achieved a remarkable milestone. The invention titled “An Energy Harvesting System for Node Devices and a Method Thereof” has been granted a patent by the Patent Office Journal, under Application Number: 202241066526. This achievement marks a significant leap forward in the realm of energy harvesting systems, promising a brighter and more secure future for IoT applications.

Abstract

The present invention broadly relates to the design of a secure and Trojan-resilient energy harvesting system (EHS) for IoT end node devices. The objective is to develop a state-of-the-art energy harvesting system that can supply uninterrupted power to the sensors used in IoT. The EHS is self-sustainable, and the higher bias voltages are generated on chip. The system mainly consists of a security module, a power conditioning module, a Trojan-resilient module, and a load controller module. A power failure in the sensors used in IoT may lead to information loss, thereby causing catastrophic situations, so an uninterrupted power supply is a must for the smooth functioning of IoT devices. This invention caters to both the power requirements and the security issues of IoT end node devices.

Practical Implementation:

IoT end node devices need a 24×7 power supply and are very sensitive to attacks made by adversaries before and after fabrication. This invention meets the power requirement of end node devices with green energy and secures the EHS-IC from adversaries and attacks. It can therefore be used by individuals, for powering sensors at remote locations, and as part of smart agriculture.

Future research plans:

To develop more secure and reliable designs that make an IoT smart node smarter and self-sustainable, and to explore more circuit-level techniques and find new ways to create more power-efficient designs.

- Published in Computer Science News, CSE NEWS, Departmental News, Research News

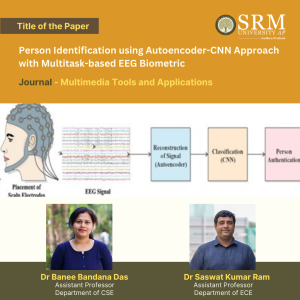

Unleashing the Power of Neuroscience: Paper on Person Identification

In a remarkable academic achievement, Dr Banee Bandana Das, Assistant Professor in the Department of Computer Science and Engineering, and Dr Saswat Kumar Ram, Assistant Professor in the Department of Electronics and Communication Engineering, have made significant contributions to the field of biometric security. Their paper, titled “Person Identification using Autoencoder-CNN Approach with Multitask-based EEG Biometric,” has been published in the journal Multimedia Tools and Applications, which is recognised as a Q1 journal with an impact factor of 3.6.

This pioneering work showcases a novel approach to person identification using electroencephalogram (EEG) data. The research leverages the power of Autoencoder-CNN models combined with multitask learning techniques to enhance the accuracy and reliability of EEG-based biometric systems.

The publication of this paper not only underscores the high-quality research conducted at SRM University-AP but also places the institution at the forefront of innovative developments in biometric technology. It is a testament to the university’s commitment to advancing scientific knowledge and providing its faculty with a platform to impact the global research community positively.

Abstract

In this research paper, we propose an unsupervised framework for feature learning based on an autoencoder to learn sparse feature representations for EEG-based person identification. The autoencoder and CNN carry out the person identification task through signal reconstruction and recognition. Electroencephalography (EEG) based biometric systems enable humans to recognise, identify and communicate with the outer world using brain signals for interaction. EEG-based biometrics offer promising solutions because of their high security and portable instruments. Motor imagery EEG (MI-EEG) is one of the most widely studied EEG signals; it reveals a subject’s movement intentions without actual actions. The proposed framework proved to be a practical approach to managing the massive volume of EEG data and identifying a person based on their different tasks and resting states.

The title of Research Paper in the Citation Format

Person identification using autoencoder-CNN approach with multitask-based EEG biometric. Multimedia Tools Appl (2024).

Practical implementation/social implications of the research

- To develop a personal identification system using MI-EEG data.

- This work is about an Autoencoder-CNN-based biometric system with EEG motor imagery inputs for dimensionality reduction and denoising (extracting original input from noisy data).

- The designed Autoencoder-CNN-based biometric architecture to model MI-EEG signals is efficient for cybersecurity applications.
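
The denoising idea mentioned above (recovering the original input from noisy data through a low-dimensional code) can be illustrated with a minimal sketch. This is not the authors' Autoencoder-CNN architecture: it is a small dense autoencoder in NumPy, trained on synthetic vectors that merely stand in for EEG features. The function names, layer sizes, noise level, and learning rate are all illustrative assumptions.

```python
import numpy as np


def make_synthetic_signals(n=200, dim=16, latent=2, seed=0):
    """Synthetic stand-in for EEG feature vectors (assumption, not real EEG):
    points lying on a low-dimensional linear subspace."""
    rng = np.random.default_rng(seed)
    basis = rng.normal(0.0, 1.0, (latent, dim)) / np.sqrt(dim)
    return rng.normal(0.0, 1.0, (n, latent)) @ basis


def train_denoising_autoencoder(clean, hidden=4, noise_std=0.1,
                                lr=0.5, epochs=500, seed=1):
    """Train a one-hidden-layer autoencoder to map noisy inputs to clean ones.

    Returns the learned parameters and the per-epoch reconstruction loss.
    """
    rng = np.random.default_rng(seed)
    n, dim = clean.shape
    w1 = rng.normal(0.0, 0.1, (dim, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 0.1, (hidden, dim))
    b2 = np.zeros(dim)
    losses = []
    for _ in range(epochs):
        # Corrupt the input; the target stays the clean signal.
        noisy = clean + rng.normal(0.0, noise_std, clean.shape)
        code = np.tanh(noisy @ w1 + b1)   # encoder: dim -> hidden
        recon = code @ w2 + b2            # decoder: hidden -> dim
        err = recon - clean
        losses.append(float(np.mean(err ** 2)))

        # Backpropagate the mean squared error.
        grad_recon = 2.0 * err / err.size
        grad_w2 = code.T @ grad_recon
        grad_b2 = grad_recon.sum(axis=0)
        grad_pre = (grad_recon @ w2.T) * (1.0 - code ** 2)
        grad_w1 = noisy.T @ grad_pre
        grad_b1 = grad_pre.sum(axis=0)

        # Plain gradient descent step.
        w1 -= lr * grad_w1
        b1 -= lr * grad_b1
        w2 -= lr * grad_w2
        b2 -= lr * grad_b2
    return (w1, b1, w2, b2), losses


if __name__ == "__main__":
    signals = make_synthetic_signals()
    _, history = train_denoising_autoencoder(signals)
    print("first loss:", history[0], "last loss:", history[-1])
```

The hidden layer is narrower than the input, so the network has to compress each signal before reconstructing it, which is where the dimensionality reduction comes from. In a pipeline like the one described, the learned code would then be passed to a classifier such as a CNN.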

Collaborations

- IIITDM, Kurnool, India

- National Institute of Technology, Rourkela, India

- University of North Texas, Denton, USA

Future Research Plan

In the future, different deep learning and machine learning methods can be combined to achieve better performance in this EEG-based security field and in other signal processing areas. We will investigate the robustness of deep learning architectures to design a multi-session EEG biometric system.

- Published in CSE NEWS, Departmental News, ECE NEWS, News, Research News

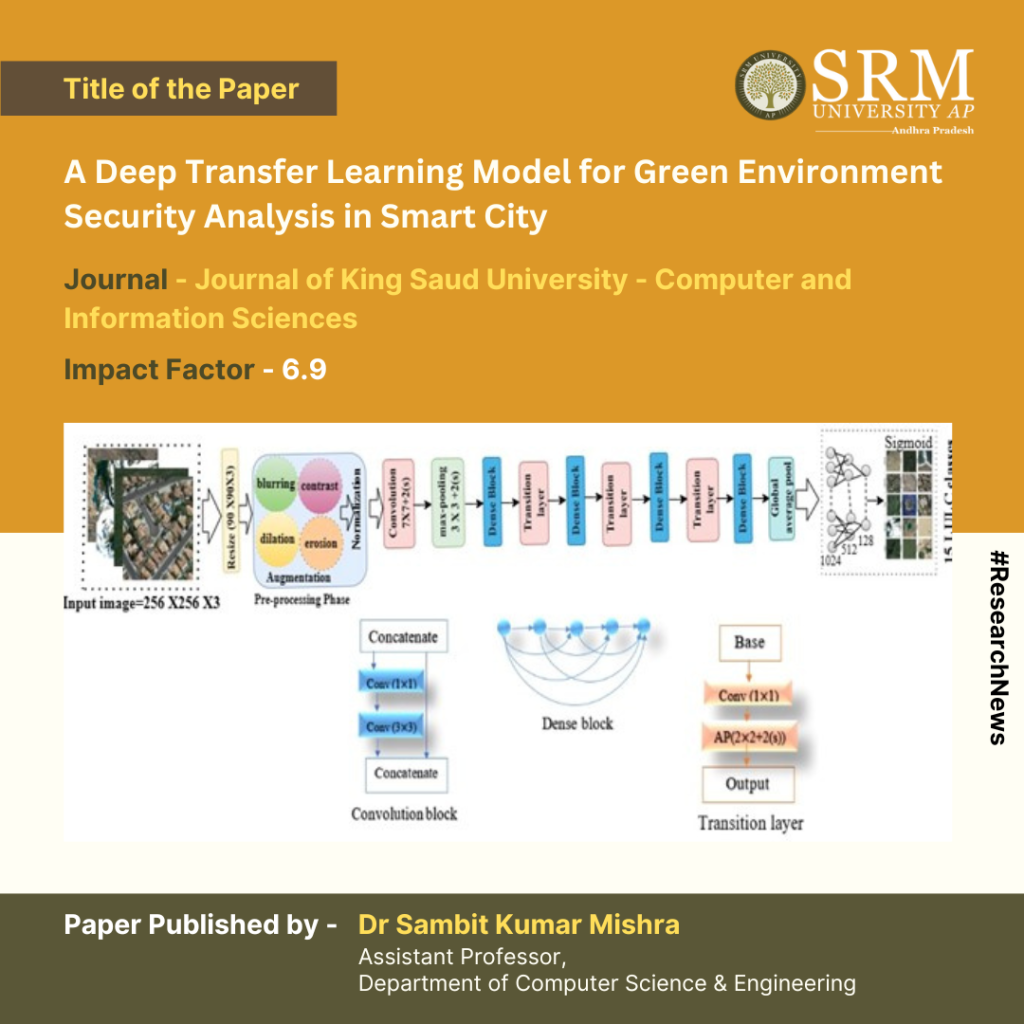

Deep Transfer Learning for Green Environment Security in Smart Cities

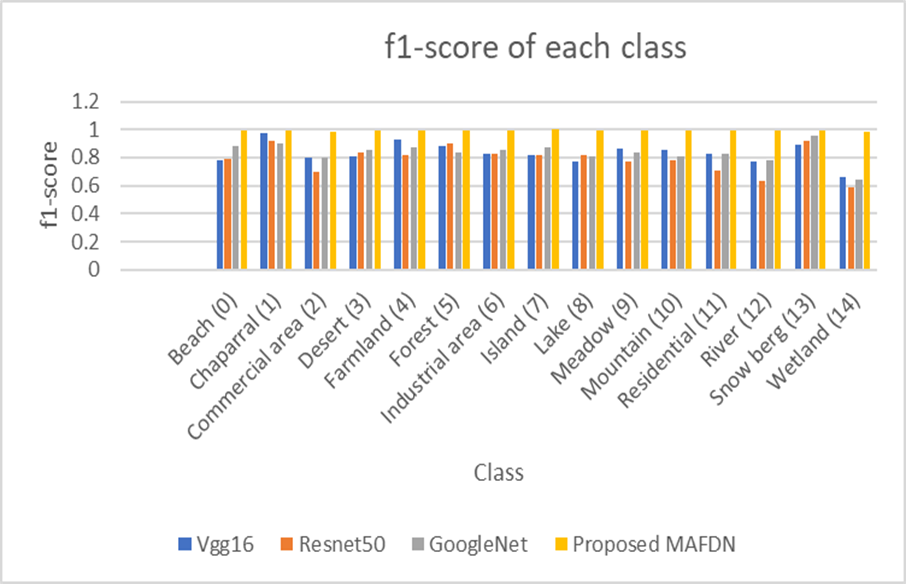

The Department of Computer Science and Engineering is pleased to announce that a research paper titled “A Deep Transfer Learning Model for Green Environment Security Analysis in Smart City”, authored by Dr Sambit Kumar Mishra, Assistant Professor, has been published in the Journal of King Saud University – Computer and Information Sciences, a Q1 journal with an Impact Factor (IF) of 6.9. The study introduces a model to automatically classify high-resolution scene images for environmental conservation in smart cities. By enhancing the training dataset with spatial patterns, the model improves green resource management and personalised services. It also demonstrates the effectiveness of Land Use and Land Cover (LULC) classification in smart city environments using transfer learning. Data augmentation techniques improve model performance, and optimisation methods enhance efficiency, contributing to better environmental management.

Abstract

The research addresses the importance of green environmental security in smart cities and proposes a morphologically augmented fine-tuned DenseNet121 (MAFDN) model for Land Use and Land Cover (LULC) classification. This model aims to automate the categorisation of high spatial resolution scene images to facilitate green resource management and personalised services.
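
As a rough illustration of what morphological augmentation of a training set can look like, the sketch below derives extra variants of a greyscale image using dilation, erosion, closing, and opening with a square window. The summary above does not state which morphological operators or window sizes the MAFDN model uses, so the operators, the 3×3 window, and all function names here are assumptions made for illustration only. The fine-tuning of DenseNet121 itself is not shown.

```python
import numpy as np


def _windows(img, k=3):
    """Return every k-by-k neighbourhood of a 2-D image (edge-padded)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    return np.lib.stride_tricks.sliding_window_view(padded, (k, k))


def dilate(img, k=3):
    """Greyscale dilation: each pixel becomes the maximum of its window."""
    return _windows(img, k).max(axis=(-2, -1))


def erode(img, k=3):
    """Greyscale erosion: each pixel becomes the minimum of its window."""
    return _windows(img, k).min(axis=(-2, -1))


def morphological_augment(img, k=3):
    """Return the original image plus four morphological variants."""
    return [
        img,
        dilate(img, k),              # thickens bright structures
        erode(img, k),               # thins bright structures
        erode(dilate(img, k), k),    # closing: fills small dark gaps
        dilate(erode(img, k), k),    # opening: removes small bright specks
    ]


if __name__ == "__main__":
    scene = np.zeros((5, 5))
    scene[2, 2] = 1.0
    for variant in morphological_augment(scene):
        print(variant.sum())
```

Each variant keeps the original image size, so the augmented images can share the label of the source image and be added directly to the training set.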

Dr Mishra collaborated with Dr Rasmita Dash and Madhusmita Sahu from SoA Deemed to be University, India, as well as Mamoona Humayun, Majed Alfayad, and Mohammed Assiri from universities in Saudi Arabia.

His plans include optimising the model using pruning methods to create lightweight scene classification models for resolving challenges in LULC datasets.

- Published in CSE NEWS, Departmental News, News