OCIT-2024: An International Conference Exploring Trends in Information Technology

The 22nd OITS International Conference on Information Technology (OCIT-2024) was hosted at SRM University-AP from December 12 to 14, 2024. The conference, a premier platform for discussing advancements in information technology, brought together distinguished academics, researchers, industry professionals, and students from across the globe. OCIT-2024 received 600 research paper submissions, of which 136 were accepted and registered for presentation after rigorous review.

OCIT-2024 had seven keynote speakers, who delivered insightful presentations offering deep expertise and futuristic perspectives on emerging trends:

- Prof. Arun Kumar Pujari, HOD of AI & CSE, Adviser and Professor Emeritus, Mahindra University

- Prof. Banshidhar Majhi, Professor (HAG), Computer Science and Engineering, NIT Rourkela; Former Director, IIITDM KP; Former VC, VSSUT Burla

- Prof. Prabir Kumar Biswas, IIT Kharagpur

- Prof. Sharad Sharma, University of North Texas (UNT)

- Dr Kannan Srinathan, IIIT Hyderabad

- Prof. Siba Kumar Udgata, University of Hyderabad

- Dr Kumar Goutam, Founder and President of QRACE

Esteemed session chairs guided thought-provoking discussions, elevating the intellectual rigour of the conference. Presenters also shared cutting-edge research, fostering a vibrant exchange of ideas and innovation. The topics explored ranged from artificial intelligence and machine learning to advanced networking and quantum computing. The conference emphasised the importance of collaboration between academia and industry in addressing global challenges and shaping the future.

The conference concluded on a high note, inspiring participants to continue their pursuit of excellence in research and innovation. Twelve papers were recognised as best papers for their exceptional quality and contribution. All 136 accepted papers will be published in IEEE Xplore (Scopus Indexed). Additionally, the authors of approximately 15% of the papers will be invited to submit to peer-reviewed journals.

OCIT-2024 was a remarkable event, leaving a lasting impact on its participants and setting the stage for future technological advancements. The event celebrated the spirit of innovation and research through well-curated presentations.

- Published in CSE NEWS, Departmental News, News, Research Events, Research News

Dr Gavaskar Publishes Patent on Generating Prompts

The Department of Computer Science and Engineering is proud to announce that Dr S Gavaskar has published his patent titled “A System for Generating Prompts for Generative Artificial Intelligence (AI) Applications” (Application Number: 202441091788). The invention is expected to be a significant advancement in the field of AI, enabling the creation of contextually rich and user-specific prompts and thereby enhancing the accuracy and usability of generative AI systems across various domains.

A Brief Abstract:

This invention relates to the prompt creation process for generative AI applications. It introduces a Four-Way Corpus Directory (FWCD), comprising the Persona corpus, the Localized Application Specific Content Corpus, the Annotator corpus, and the Stopword corpus, to create well-formed, contextual prompts for AI models. It also employs Semantic Based Categorization and Ranking (SBCR) to semantically categorize and rank the content in the Localized Application Specific Content Corpus. The invention improves the interaction between users and generative AI. It helps deliver more accurate, semantics-based outputs from the AI models, improving the overall performance and usability of the system.

Explanation in Layperson’s Terms:

Prompt engineering is the process of using natural language to create instructions that generative artificial intelligence (AI) models can understand and interpret. In this research, we have created a system for prompt creation with which users can build their own prompts by combining their persona details with stopword and annotator content from their localized corpus directory, before submitting the prompt to LLMs such as ChatGPT, Copilot, etc.
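To make the idea concrete, here is a minimal sketch of how a prompt might be assembled from the four corpora. It is not the patented system: the function name `build_prompt`, the dictionary keys, and the word-overlap ranking (a crude stand-in for the semantic categorization and ranking step) are all assumptions made for illustration.

```python
import re

def build_prompt(persona, content_snippets, annotations, stopwords, query, top_k=2):
    """Compose a prompt from four hypothetical corpora (illustrative only)."""
    def tokens(text):
        # Lowercase word tokens with stopwords removed
        return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in stopwords]

    query_terms = set(tokens(query))
    # Rank localized content by word overlap with the query.
    # Assumption: a real system would use a semantic ranking here.
    ranked = sorted(
        content_snippets,
        key=lambda s: len(query_terms & set(tokens(s))),
        reverse=True,
    )
    lines = [
        f"Persona: {persona['role']} ({persona['level']})",
        "Context:",
        *[f"- {s}" for s in ranked[:top_k]],
        f"Instruction: {annotations['instruction']}",
        f"Question: {query}",
    ]
    return "\n".join(lines)

# Hypothetical corpora for a student user
persona = {"role": "student", "level": "beginner"}
snippets = [
    "Lab safety rules for chemistry students",
    "Binary search runs in logarithmic time",
    "Sorting algorithms compare elements",
]
annotations = {"instruction": "Explain step by step in simple language."}
stopwords = {"how", "does", "the", "in", "for"}

print(build_prompt(persona, snippets, annotations, stopwords,
                   "How does binary search work?", top_k=1))
```

The point of the sketch is the separation of concerns: persona, content, annotation, and stopword data live in separate stores, and the prompt is assembled from them just before it is sent to the model.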

Practical Implementation / Social Implications:

- This concept can be implemented in educational institutions to generate tailored prompts for learning materials and academic projects. Enterprises can use localized content to generate role-specific prompts, and marketing organizations can use it to create prompts aligned with specific campaigns or audience demographics. It can also be used in organizations where several people with different roles and responsibilities each have their own localized content, from which a prompt has to be created for a generative AI application.

This invention also lets people of different skill levels access the system and create their own prompts for their applications.

Future Research Plans:

Future research will focus on creating LLM and AI-related applications for education, cyber security, legal, and enterprise domains.

- Published in CSE NEWS, Departmental News, News, Research News

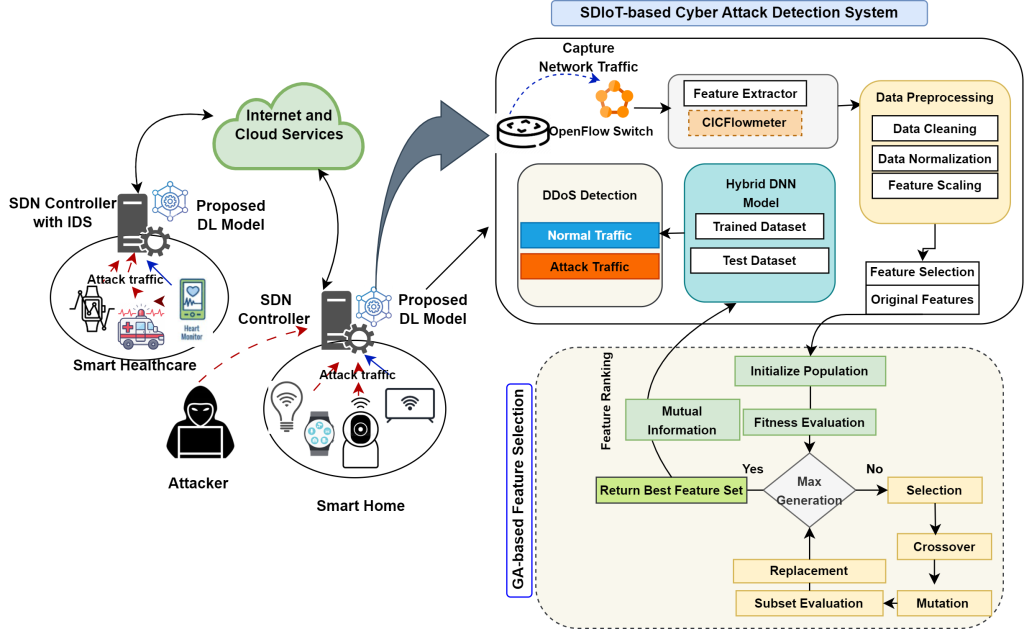

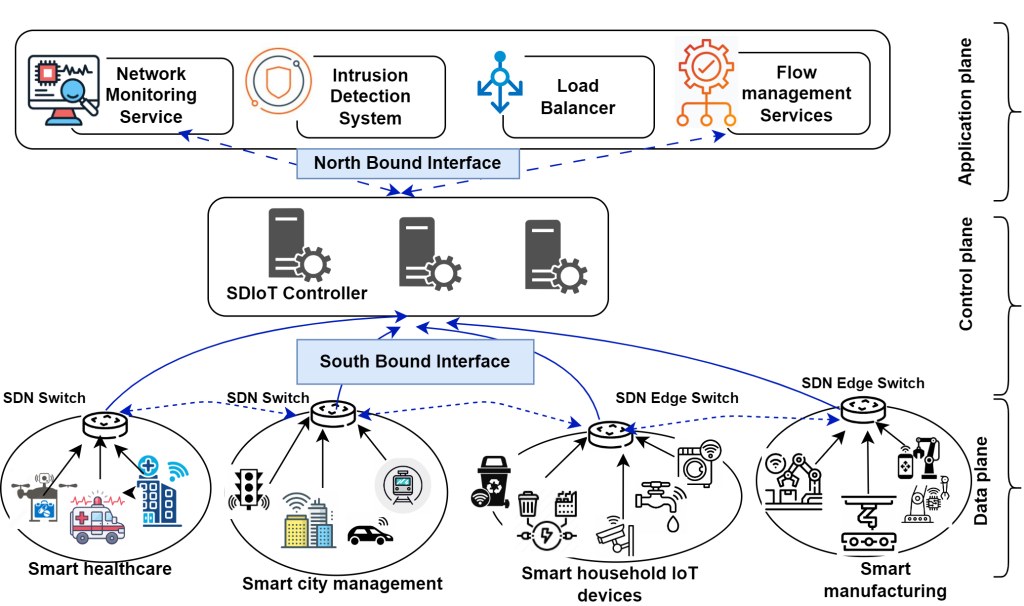

A Unified Learning Framework for Detecting Cyberattacks in IoT Networks

The Department of Computer Science and Engineering is proud to announce that Assistant Professors Dr Kshira Sagar Sahoo and Dr Tapas Kumar Mishra, along with their research scholar Ms Arati Behera, have published their paper “A Combination Learning Framework to Uncover Cyberattacks in IoT Networks” in the prestigious Q1 journal Internet of Things, which has an Impact factor of 6.

The article addresses IoT security challenges by utilising Software Defined Networking technology and AI. The authors use a Genetic Algorithm to select the most important data features and a hybrid deep learning model combining CNN, Bi-GRU, and Bi-LSTM to detect cyber-attacks effectively. Tested on real-world IoT datasets, the system demonstrates superior accuracy, faster detection, and lower resource usage than existing methods, making it a promising solution for securing resource-constrained IoT networks.

Abstract

This study addresses the security challenges in IoT networks, focusing on resource constraints and vulnerabilities to cyber-attacks. Utilising Software Defined Networking and its adaptability, the authors propose an efficient security framework using a Genetic Algorithm for feature selection and Mutual Information (MI) for feature ranking. A hybrid Deep Neural Network (DNN) combining CNN, Bi-GRU, and Bi-LSTM is developed to detect attacks. Evaluated on InSDN, UNSW-NB15, and CICIoT 2023 datasets, the model outperforms existing methods in accuracy, detection time, MCC, and resource efficiency, demonstrating its potential as a scalable and effective solution for IoT network security.
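As an illustration of the feature-ranking step mentioned above, the following sketch scores discrete features by their mutual information with the attack label and sorts them. It is not the authors' pipeline: the feature names and toy values are hypothetical, and the Genetic Algorithm and the deep learning model are not reproduced. Continuous traffic features would first need binning or a different estimator.

```python
import math
from collections import Counter

def mutual_information(xs, ys):
    """Mutual information (in bits) between two equal-length discrete sequences."""
    n = len(xs)
    px, py, pxy = Counter(xs), Counter(ys), Counter(zip(xs, ys))
    mi = 0.0
    for (x, y), count in pxy.items():
        # p(x, y) * log2( p(x, y) / (p(x) * p(y)) ), written with raw counts
        mi += (count / n) * math.log2(count * n / (px[x] * py[y]))
    return mi

def rank_features(features, labels):
    """Return (name, score) pairs sorted from most to least informative."""
    scores = {name: mutual_information(col, labels) for name, col in features.items()}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Toy data: 1 = attack, 0 = benign (hypothetical, not from the paper's datasets)
labels = [0, 0, 1, 1, 0, 1, 0, 1]
features = {
    "constant":    [1, 1, 1, 1, 1, 1, 1, 1],  # carries no information
    "port_parity": [0, 1, 0, 1, 0, 1, 0, 1],  # weakly related to the label
    "syn_flag":    [0, 0, 1, 1, 0, 1, 0, 1],  # identical to the label
}

for name, score in rank_features(features, labels):
    print(f"{name}: {score:.3f} bits")
```

A ranking like this can be used to discard uninformative features before training, which matters on resource-constrained devices where every input dimension costs memory and compute.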

Practical Implementation/Social Implications of the Research

The practical implementation of this research lies in enhancing the security of IoT networks, which are increasingly integral to smart homes, healthcare, transportation, and industrial systems. By detecting and mitigating cyber-attacks efficiently, the proposed model can safeguard sensitive data, prevent service disruptions, and ensure the reliability of IoT systems.

Collaborations

This research has been conducted in partnership with Umeå University, Sweden.

Future Research Plans

There is potential to enhance the deep learning approach further to reduce execution time on power-constrained devices. Additionally, federated learning could be investigated as a use case, especially for edge devices within typical software-defined IoT networks.

- Published in CSE NEWS, Departmental News, News, Research News

A Blockchain and IoT-Driven Solution for Farmers

Farming is often regarded as a challenging occupation, synonymous with hardship and unpredictability, which leaves farmers financially vulnerable and often on the brink of poverty. Insuring crops can minimise the risk of loss, provided the process does not get bogged down by excessive bureaucracy and cumbersome paperwork. Dr Naga Sravanthi Puppala, Assistant Professor in the Department of Computer Science and Engineering, has come up with a solution that uses blockchain technology and real-time IoT data to provide quick, automatic crop coverage. By simplifying the insurance process, the invention helps reshape the future of agriculture just when it is needed most.

Abstract

The invention is a groundbreaking design patent that employs a single, sophisticated smart contract policy to autonomously manage the entire crop insurance process. This system innovatively combines blockchain technology with real-time IoT data collection to create an efficient, transparent, and reliable insurance solution for farmers. Central to this invention is a singular smart contract policy designed to oversee every stage of the insurance lifecycle, from policy issuance and dynamic risk assessment to claims processing and payout disbursement. This smart contract policy is meticulously programmed with specific conditions and thresholds, including weather patterns, soil moisture levels, and crop health indicators, all monitored by IoT devices in the field. As these conditions are tracked in real-time, the smart contract policy autonomously adjusts coverage and triggers payouts when necessary, eliminating the need for human intervention. This system not only enhances efficiency by reducing administrative costs but also ensures prompt and accurate payouts. By relying on tamper-proof data and predefined conditions, the invention offers a secure and transparent approach to crop insurance, providing farmers with a dependable safety net against crop losses.

In short, this invention makes crop insurance smarter, simpler, and fairer, giving farmers the support they need when they need it most.

Practical Implementation and Social Implications of the Research

Practical Implementation

- Blockchain: Secure platform for immutable records.

- Smart Contracts: Automate insurance claims based on predefined triggers.

- IoT Devices: Monitor crop and environmental data in real-time.

- Oracles: Fetch external data (e.g., weather reports).

- Workflow: Farmers enroll, pay premiums digitally, and receive automatic payouts if crop damage is detected.
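The trigger logic described above can be sketched in a few lines. This is a plain Python simulation of a threshold-based payout rule, not an on-chain smart contract and not the patented design. The `Policy` fields, the soil-moisture threshold, and the dry-streak rule are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Policy:
    farmer: str
    insured_amount: float
    min_soil_moisture: float  # percent; hypothetical trigger threshold
    max_dry_days: int         # consecutive low-moisture days tolerated
    paid_out: bool = False

def evaluate_policy(policy, daily_soil_moisture):
    """Return the payout triggered by the sensor readings, or 0.0 if none."""
    if policy.paid_out:
        return 0.0  # a policy pays out at most once
    dry_streak = 0
    for reading in daily_soil_moisture:
        # Extend the streak on a dry day, reset it otherwise
        dry_streak = dry_streak + 1 if reading < policy.min_soil_moisture else 0
        if dry_streak > policy.max_dry_days:
            policy.paid_out = True
            return policy.insured_amount
    return 0.0

# Hypothetical policy and a week of IoT soil-moisture readings (percent)
policy = Policy("farmer-001", insured_amount=50000.0,
                min_soil_moisture=20.0, max_dry_days=3)
readings = [25, 18, 17, 22, 15, 14, 13, 12]
print(evaluate_policy(policy, readings))
```

Because the payout depends only on predefined conditions and recorded readings, the same rule gives the same answer to anyone who replays the data. That property is what lets claims be settled without manual assessment.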

Social Implications

- Transparency: Eliminates fraud and delays in claims.

- Inclusivity: Provides insurance access to small-scale farmers.

- Economic Stability: Reduces financial strain on farmers after disasters.

- Sustainability: Encourages data-driven, risk-resilient agriculture.

Future Research Plans:

Building upon the foundation of this invention, my future research will focus on advancing and expanding its applications to maximize impact in agriculture and beyond. Key areas of exploration include:

1. Enhancing IoT Integration for Precision Agriculture

The aim is to develop more advanced IoT devices and sensors that can collect highly specific data on soil quality, weather patterns, and crop health. This data will improve the system’s ability to predict risks and tailor insurance policies to individual farms. Research will also involve optimizing sensor networks for affordability and accessibility to smallholder farmers.

2. Developing Dynamic Risk Assessment Models

By incorporating machine learning and predictive analytics, I plan to create dynamic risk assessment models. These models will continuously learn from real-time data and historical trends, allowing the system to provide proactive alerts to farmers about potential risks and automatically adjust insurance terms to reflect current conditions.

3. Expanding Blockchain Applications Beyond Crop Insurance

While the current focus is on crop insurance, blockchain’s secure and transparent nature offers opportunities for broader agricultural applications. I intend to explore its use for supply chain traceability, ensuring that crops reach markets efficiently and without tampering, and for facilitating peer-to-peer lending among farmers.

4. Testing and Scaling in Diverse Agricultural Environments

Field trials will be conducted in various regions and farming contexts to test the system’s adaptability and scalability. This includes:

- Testing in regions prone to extreme weather conditions.

- Evaluating the system’s performance in specialized farming industries, such as vineyards or organic farming.

- Collaborating with agricultural cooperatives to implement the system across multiple farms simultaneously.

5. Social and Economic Impact Assessment

A critical part of my research will involve studying the socioeconomic impact of this invention on farmers, particularly smallholder farmers. I aim to assess how it influences their livelihoods, productivity, and financial security. This will guide future improvements to make the system more inclusive and equitable.

6. Exploring Policy and Regulatory Frameworks

For widespread adoption, I plan to engage with policymakers to align the system with existing agricultural and insurance regulations. The research will focus on creating policy frameworks that encourage adoption, particularly in developing regions, and on addressing potential legal challenges related to blockchain and data privacy.

7. Collaborating for Multi-Sectoral Impact

I plan to form partnerships with financial institutions, agritech companies, and government agencies to co-develop solutions that integrate blockchain-based insurance with other agricultural services, such as microloans, subsidies, and educational programs.

By addressing these areas, my research will contribute to creating a more resilient and sustainable agricultural ecosystem, empowering farmers with cutting-edge technology while enhancing food security and economic stability globally.

- Published in CSE NEWS, Departmental News, News, Research News

SecureX Hackathon: Students Shine at the Cybersecurity Codefest

The Cybersecurity Hackathon “Crack the Code & Secure the Future”, held at SRM University-AP in collaboration with BSI Learning on November 23-24, 2024, marked a significant achievement, bringing together students, professionals, and experts in the field to tackle real-time cybersecurity challenges. The event provided participants with hands-on expertise and the opportunity to design innovative solutions aimed at addressing the growing concerns in the field of cybersecurity.

The hackathon opened with an inspiring speech by the Dean of Research Prof. Ranjit Thapa, who emphasised the university’s dedication to fostering innovation and research from the very beginning of a student’s academic journey. He highlighted the importance of the event, stating that all participants would receive certificates of appreciation, reinforcing the university’s commitment to recognising and motivating talent.

Mr Vipul Rastogi, the keynote speaker, expressed his admiration for SRM University-AP and was particularly impressed by the institution’s provision of seed funding for undergraduate students, something he had not encountered elsewhere. A seasoned cybersecurity expert, Mr Rastogi stressed the growing demand for cybersecurity professionals, noting that 40% of cybersecurity positions remain vacant due to the rapidly expanding threat landscape.

Mr Chris Chan, the Cybersecurity Education Lead and Consultant at BSI Learning, Australia, provided valuable insights into the intricacies of cybersecurity, including areas such as penetration testing, cloud security, governance, risk, and compliance. Mr Chan also elaborated on the importance of user education, vulnerability management, and organisational resilience in mitigating cybersecurity risks.

The 81 participants, divided into 22 teams, were challenged to design and implement innovative solutions addressing one of the following key cybersecurity areas: Prevention and User Education; Detection and Monitoring; Response and Mitigation; and Policy Compliance and Business Continuity.

The event focused on fostering a collaborative environment where participants could experiment, innovate, and deliver real-time solutions to cybersecurity problems. Team Tech Blazers won the first prize of 800 AUD, while Team Secure Ops and Team Soul Society won the second and third prizes of 500 AUD and 200 AUD respectively. All teams contributed actively with outstanding solutions, adding a competitive yet educational aspect to the hackathon.

The event concluded with a vote of thanks to Vipul Rastogi, Chris Chan, and all participants for their valuable contributions. The Cybersecurity Hackathon was a resounding success, showcasing the power of collaboration, innovation, and secure solutions in addressing the pressing challenges of today’s digital world.

The university looks forward to continuing such initiatives, further strengthening its role as a leader in research and innovation in the academic community.

- Published in CSE NEWS, Departmental News, News

CSE Student Publishes in Q1 Journal on Breakthrough Research in Water Technology

Exemplary student achievements in academia, research and industry are a testament to the excellence nurtured at SRM University-AP. Mr Anwar Faizaan Reza, a second-year B.Tech. student in the Department of Computer Science and Engineering, has published his research paper in the prestigious Q1 journal Desalination. His paper, titled “Multi-Layer Perceptron (MLP) Models for Water Treatment and Desalination Processes”, provides a comprehensive review of MLP models as innovative tools in water treatment and desalination processes.

With the growing global water scarcity due to increasing population and urbanisation, the study emphasises the need for advanced technologies to optimise water management. Mr Reza discusses how MLPs, a type of artificial neural network, can effectively handle complex, non-linear data, making them suitable for predicting water quality and treatment efficiency.

The paper compares MLPs with traditional models, highlighting their advantages in adaptability and accuracy. It also addresses the limitations of MLPs, such as their dependence on high-quality training data and susceptibility to overfitting. Additionally, the research identifies existing gaps in the application of MLPs in real-world scenarios and suggests future opportunities for integration with other AI techniques and real-time data analysis.

In his paper, Mr Anwar Reza proposes that MLPs can significantly enhance decision-making in water treatment by providing accurate forecasts and optimizing operational processes. He also discusses the importance of developing user-friendly cost estimation tools for desalination projects and advocates for using MLPs in techno-economic assessments. Overall, the paper underscores the potential of MLPs to revolutionise water treatment and desalination, presenting them as vital components in addressing the challenges posed by water scarcity and quality management.

- Published in CSE NEWS, Departmental News, News, Students Achievements

A Novel Method to Estimate Parameters in Complex Biochemical Systems

With the advent of cutting-edge technology, Dr Abhijit Dasgupta, Assistant Professor at the Department of Computer Science and Engineering, has conducted breakthrough research in understanding biochemical systems with limited data in hand. His research has been published as a paper titled “Efficient Parameter Estimation in Biochemical Pathways: Overcoming Data Limitations with Constrained Regularization and Fuzzy Inference” in the Elsevier journal Expert Systems With Applications, having an impact factor of 7.5.

Abstract

This study introduces a new method to estimate parameters in biochemical pathways without relying on experimental data. The method, called the Constrained Regularized Fuzzy Inferred Extended Kalman Filter (CRFIEKF), uses fuzzy logic to estimate parameters based on known but imprecise relationships between molecules. To handle complex and unstable data, the method incorporates Tikhonov regularisation, improving accuracy and stability. CRFIEKF was tested on several pathways, including glycolysis and JAK/STAT signalling, and reliable results were obtained. This approach offers a useful tool for estimating parameters in complex biochemical systems, especially when experimental data is limited.
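To show what Tikhonov regularisation does in the simplest possible setting, the sketch below fits a single rate constant k in a linear model v = k·s, with a penalty λ(k − k₀)² that pulls the estimate towards a prior guess k₀. This is only the scalar textbook case, not CRFIEKF itself; the variable names, the toy data, and the choice of prior are assumptions.

```python
def tikhonov_scalar(s, v, lam=0.0, k_prior=0.0):
    """Minimise sum((v_i - k*s_i)^2) + lam*(k - k_prior)^2 over k.

    Setting the derivative to zero gives the closed form
        k = (sum(s_i*v_i) + lam*k_prior) / (sum(s_i^2) + lam).
    """
    sv = sum(si * vi for si, vi in zip(s, v))
    ss = sum(si * si for si in s)
    return (sv + lam * k_prior) / (ss + lam)

# Toy, noise-free data generated with k = 2 (hypothetical values)
substrate = [1.0, 2.0, 3.0]
rate = [2.0, 4.0, 6.0]

print(tikhonov_scalar(substrate, rate))                        # ordinary least squares
print(tikhonov_scalar(substrate, rate, lam=14.0))              # shrunk towards a prior of 0
print(tikhonov_scalar(substrate, rate, lam=14.0, k_prior=2.0)) # prior agrees with the data
```

The extra λ in the denominator keeps the estimate well defined even when the data are sparse or nearly degenerate (a small Σs²). This is the stabilising effect the abstract refers to.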

Explanation of the Research in Layperson’s Terms

This research is about finding a new way to predict how living cells work, especially when scientists don’t have enough data from experiments. Normally, to understand how cells function, scientists need to collect a lot of information over time, which can be costly, difficult, or even impossible.

To solve this, the researchers developed a new method that doesn’t need as much experimental data. Instead of relying on exact measurements, their method uses fuzzy logic, which is like making smart guesses based on patterns and relationships we know, even if we don’t have perfect information. They also used a technique to keep these guesses steady and reliable, even when the data is messy or incomplete.

They tested this method on different biological processes, such as how cells turn food into energy (a process called glycolysis) and how cells send signals using proteins (like in the JAK/STAT pathway). The method worked well and gave accurate results.

In simple terms, this research helps scientists predict how cells behave without needing a lot of expensive and hard-to-get data, making it easier to study the complex systems inside living organisms.

Practical Implementation/Social Implications of the Research

The practical implementation of this research lies in its ability to accurately predict how biological systems, such as cells, function without relying heavily on time-consuming and costly experimental data. This method can be applied in various fields, including drug development, personalised medicine, and agriculture, where understanding complex biological processes is crucial. For instance, pharmaceutical companies could use this technique to model how a drug will interact with different biological pathways, speeding up the drug discovery process. Similarly, it could help tailor medical treatments to individual patients by predicting how their unique biological makeup will respond to specific therapies.

The broader social impact of this research is significant. By reducing the need for extensive experimental data, this method can lower the cost and time required for scientific discoveries in healthcare and biotechnology. This could lead to faster development of new medicines, more affordable healthcare solutions, and personalised treatments that improve patient outcomes. In agriculture, this method can help optimise crop growth and resilience, contributing to food security. Overall, this research provides a pathway for more efficient and cost-effective advancements in biology, healthcare, and environmental sciences, ultimately benefiting society by improving health and sustainability.

Collaborations

1. St Jude Children’s Research Hospital, Memphis, USA

2. University of California, San Diego, USA

3. Columbia University, New York, USA

4. Nantes Université, France

5. University of North Carolina at Chapel Hill, USA

6. Institute of Himalayan Bioresource Technology, Palampur, India

7. Indian Statistical Institute, Kolkata, India

8. Aliah University, Kolkata, India

9. Michelin India Private Limited, Pune, India

10. Gitam University, Bangalore, India

Future Research Plans

Building on the foundation laid by this research, the next steps will involve expanding its applications to more complex biological systems and personalised medicine. The following outlines a future roadmap:

1. From Time-Course Data to Pathway Enrichment and Single-Cell Modeling:

The current method, which estimates parameters without relying on time-course data, can be adapted to use time-course data when available. Time-course data captures how biological processes change over time, offering valuable insights. By integrating this data, we can refine the parameter estimation and achieve a more precise pathway enrichment analysis. This approach can be particularly beneficial in single-cell studies, where understanding the variability in cellular responses within complex diseases like cancer, diabetes, or neurodegenerative disorders is crucial. Modelling these pathways at the single-cell level will enable us to capture heterogeneity within tissues and improve disease understanding.

2. Simulating Pathways to Identify Drug Targets:

Once the pathways are enriched and modelled, we can simulate these biological networks to predict how different interventions—such as drugs—might influence the system. This simulation can help identify potential drug targets, particularly those that are critical in disease progression. For instance, by manipulating the modelled pathways, we can observe how specific proteins or molecules influence the disease state, providing insights into where a drug could be most effective.

3. Predicting Drug Side Effects:

After identifying potential drug targets, the next step is to predict the side effects of these interventions. The same model can simulate unintended consequences by analysing how modifying a target impacts other connected pathways. This simulation can provide early warnings about potential side effects, reducing the risk during later stages of drug development. Understanding these off-target effects at an early stage will be crucial for designing safer drugs.

4. Predicting Drug Molecules Using Generative Adversarial Networks (GANs):

Incorporating machine learning, particularly Generative Adversarial Networks (GANs), can take this research to the next level. GANs can be trained to generate new drug molecules by learning from existing drug databases. By feeding the pathway model’s identified targets into the GAN, we can generate candidate drug molecules that are predicted to interact with these targets effectively. This approach can significantly speed up the drug discovery process by automating the design of new drug candidates tailored to specific biological pathways.

5. Integration with Omics Data for Personalized Medicine:

The future of personalised medicine relies on integrating various layers of biological data—such as genomics, transcriptomics, proteomics, and metabolomics—into a cohesive model. By integrating pathway data with these other “omics” layers, this research will facilitate a more comprehensive understanding of individual patient biology. This integration allows for tailored treatment strategies, making personalised medicine more achievable. For instance, based on an individual’s genetic makeup and biological pathways, we can predict how they will respond to specific drugs and design personalised therapies with minimal side effects.

6. Pathway-Based Drug Design and Validation:

Once potential drug molecules are identified using GANs, they can be simulated within the enriched pathways to test their efficacy in silico. These simulations will allow researchers to understand how the drug interacts with the target pathway and its downstream effects. If the simulation shows promising results, the drug candidates can be prioritised for lab testing and clinical trials. This systematic approach, from modelling to simulation, could drastically reduce the time and cost associated with traditional drug discovery processes.

Summary

This plan transforms the current research from a powerful parameter estimation technique into a comprehensive framework for personalized medicine and drug discovery. By expanding to time-course data, single-cell modeling, pathway simulation, and integrating cutting-edge AI techniques like GANs, we can predict drug molecules tailored to individual biological systems. This personalized approach not only streamlines drug discovery but also enhances the safety and effectiveness of treatments, paving the way for more efficient and precise medical interventions.

- Published in CSE NEWS, Departmental News, News, Research News

Pioneering IoT Security: Dr Mohammad Published in Prestigious Q1 Journal

Dr Mohammad Abdussami, an Assistant Professor in the Department of Computer Science and Engineering, has made a significant contribution to the field of Internet of Things (IoT) security with the publication of his paper titled “DEAC-IoT: Design of Lightweight Authenticated Key Agreement Protocol for Intra and Inter-IoT Device Communication Using ECC with FPGA Implementation.” The research has been published in the Q1 journal Computers and Electrical Engineering, which has an impact factor of 4.

Dr Abdussami’s research addresses critical security challenges faced by IoT devices, particularly in facilitating secure communication between intra and inter-device networks. The lightweight authenticated key agreement protocol he has developed utilizes Elliptic Curve Cryptography (ECC) and Field-Programmable Gate Array (FPGA) implementation to enhance the security framework of IoT ecosystems.

As the adoption of IoT devices continues to expand across various sectors, the importance of robust security protocols cannot be overstated. Dr Abdussami’s work is poised to make a substantial impact on how devices communicate safely and efficiently, ensuring the integrity and confidentiality of data transmitted over the network.

As the demand for secure IoT solutions continues to grow, Dr Abdussami’s research stands as a beacon for future developments in this crucial area, potentially paving the way for safer and more efficient IoT interactions globally.

Abstract:

In this research work, we proposed a fog-enabled network architecture integrated with IoT devices (Intra and Inter-domain IoT devices) and developed the DEAC-IoT scheme using Elliptic Curve Cryptography (ECC) for secure authentication and key agreement. Our protocol is designed to protect device-to-device communication from security threats in resource-constrained IoT environments.

Citation format:

Abdussami Mohammad, Sanjeev Kumar Dwivedi, Taher Al-Shehari, P. Saravanan, Mohammed Kadrie, Taha Alfakih, Hussain Alsalman, and Ruhul Amin. “DEAC-IoT: Design of lightweight authenticated key agreement protocol for Intra and Inter-IoT device communication using ECC with FPGA implementation.” Computers and Electrical Engineering 120 (2024): 109696.

Explanation of the Research in Layperson’s Terms:

With more and more devices connecting wirelessly through the Internet of Things (IoT) (think of smart home gadgets, wearables, etc.), keeping their communications secure has become a big priority. However, many current communication methods for IoT devices don’t provide strong enough security. This leaves them open to cyber-attacks.

The challenge is to create a security system that is safe from attacks and doesn’t require too many computations. This is important because IoT devices often have limited resources (like low battery power or slower processors).

In this research, the authors have devised a solution: a new type of network setup (called fog-enabled architecture) that connects IoT devices with each other and with external devices. They’ve also developed a security protocol called DEAC-IoT, which uses Elliptic Curve Cryptography (ECC)—a highly efficient method for securing communications.

Their system makes it easier for IoT devices to authenticate (verify each other’s identity) and securely exchange keys (used to encrypt data), all while being lightweight enough to run on devices that don’t have a lot of processing power or energy.
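To make the key-exchange idea concrete, here is a minimal sketch of an elliptic-curve Diffie-Hellman exchange in Python. It is not the DEAC-IoT protocol itself: the tiny textbook curve, the private keys, and the function names `ec_add` and `ec_mul` are assumptions chosen for illustration, and a curve this small offers no real security. It only shows how two devices can derive the same secret from each other's public values.

```python
import hashlib

# Toy curve y^2 = x^3 + 2x + 2 over GF(17); illustrative only, far too small for real use.
P, A = 17, 2
G = (5, 1)  # base point on the toy curve (its order is 19)

def ec_add(p1, p2):
    """Add two curve points; None stands for the point at infinity."""
    if p1 is None:
        return p2
    if p2 is None:
        return p1
    x1, y1 = p1
    x2, y2 = p2
    if x1 == x2 and (y1 + y2) % P == 0:
        return None  # a point plus its inverse gives infinity
    if p1 == p2:
        slope = (3 * x1 * x1 + A) * pow((2 * y1) % P, -1, P) % P
    else:
        slope = (y2 - y1) * pow((x2 - x1) % P, -1, P) % P
    x3 = (slope * slope - x1 - x2) % P
    y3 = (slope * (x1 - x3) - y1) % P
    return (x3, y3)

def ec_mul(k, point):
    """Compute k * point with the double-and-add method."""
    result, addend = None, point
    while k:
        if k & 1:
            result = ec_add(result, addend)
        addend = ec_add(addend, addend)
        k >>= 1
    return result

# Hypothetical private keys for two devices; real keys would be large random numbers.
alice_private, bob_private = 3, 7
alice_public = ec_mul(alice_private, G)
bob_public = ec_mul(bob_private, G)

# Each device combines its own private key with the other's public key.
alice_shared = ec_mul(alice_private, bob_public)
bob_shared = ec_mul(bob_private, alice_public)

# Hash the shared x-coordinate into a session key.
session_key = hashlib.sha256(str(alice_shared[0]).encode()).hexdigest()
```

Both devices arrive at the same point without ever transmitting a private key. A real deployment would use a standardised curve and would add the mutual authentication steps that the paper describes.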

In short: the paper offers a way to securely connect IoT devices with minimal computations, making communication between devices safe from hackers, even in environments where cyber threats are common.

Practical Implication and Social Implications Associated:

The practical implementation of this research can strengthen the security of IoT devices across many sectors, from homes and cities to healthcare and industries. The proposed DEAC-IoT scheme can also be used to implement secure vehicle-to-vehicle communication in autonomous vehicles, VANETs, and Internet of Vehicles scenarios.

Socially, it can enhance trust in IoT technology, protect privacy, safeguard critical infrastructure, and promote economic and technological development—while ensuring security remains affordable even in resource-constrained environments.

In Industrial IoT (IIoT) Scenario: In industries where machines are connected via IoT (such as in factories), devices need to communicate securely to ensure the smooth running of production lines. The DEAC-IoT protocol could secure these communications, preventing industrial espionage or sabotage.

Future Research Plans

1. Design of group key authentication protocols for IoT device communication.

2. Design of handover authentication protocols for fog-enabled IoT device communication.

3. Design of quantum-safe authentication protocols for vehicle-to-vehicle communication.

Collaborations:

1. Dr Sanjeev Kumar Dwivedi, Centre of Artificial Intelligence, Madhav Institute of Technology and Science (MITS), Gwalior, Madhya Pradesh 474005, India.

2. Dr Taher Al-Shehari, Computer Skills, Department of Self-Development Skill, Common First Year Deanship, King Saud University, 11362, Riyadh, Saudi Arabia.

3. Dr Mohammed Kadrie, Computer Skills, Department of Self-Development Skill, Common First Year Deanship, King Saud University, 11362, Riyadh, Saudi Arabia.

4. Dr P Saravanan, Department of Electronics and Communication Engineering, PSG College of Technology, Coimbatore, India.

5. Dr Ruhul Amin, Department of Computer Science & Engineering, IIIT Naya Raipur, Naya Raipur 493661, Chhattisgarh, India.

6. Dr Taha Alfakih, Department of Information Systems, College of Computer and Information Sciences, King Saud University, Riyadh 11543, Saudi Arabia.

7. Dr Hussain Alsalman, Department of Computer Science, College of Computer and Information Sciences, King Saud University, Riyadh 11543, Saudi Arabia.

- Published in CSE NEWS, Departmental News, News, Research News

Enhancing Vehicle Security with Blockchain and Hybrid Computing

Dr Sriramulu Bojjagani, Assistant Professor, Department of Computer Science and Engineering, and his research scholar, Ms Praneeta Supraneni, have proposed a novel and secure way to safeguard connected cars against hacking, data breaches, and unauthorised access. Their research paper, titled “Handover-Authentication Scheme for the Internet of Vehicles (IoV) using Blockchain and Hybrid Computing”, aims to improve the transparency and traceability of vehicle authentication. Read the abstract to learn more!

Abstract:

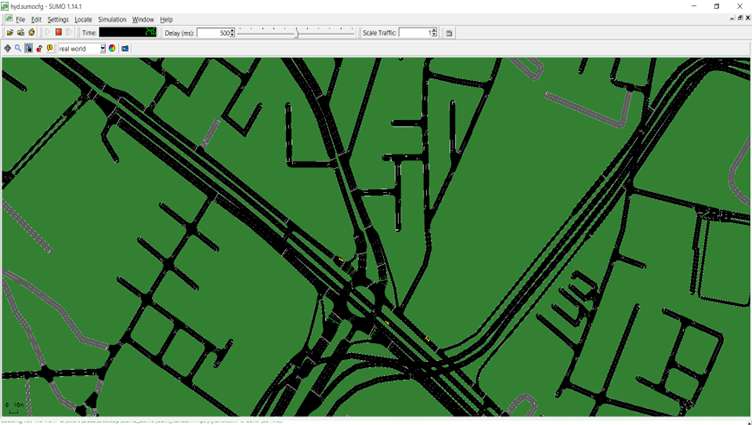

Advancements in telecommunications are benefiting the Internet of Vehicles (IoV) in several ways. Minimal latency, faster data transfer, and reduced costs are transforming the IoV landscape. While these advantages accompany the latest improvements, they also expand cyberspace, leading to security and privacy concerns. Vehicles rely on trusted authorities for registration and authentication processes, resulting in bottleneck issues and communication delays. Moreover, the central trusted authority and intermediate nodes raise doubts regarding transparency, traceability, and anonymity. This paper proposes a novel vehicle authentication handover framework leveraging blockchain, IPFS, and hybrid computing. The framework uses a Proof of Reputation (PoR) consensus mechanism to improve transparency and traceability and the Elliptic Curve Cryptography (ECC) cryptosystem to reduce computational delays. The suggested system assures data availability, secrecy, and integrity while maintaining minimal latency throughout the vehicle re-authentication process. Performance evaluations show the system’s scalability, with key creation, encoding, decoding, and registration operations completing rapidly. Simulation is performed using SUMO to handle vehicle mobility in an IoV environment. The findings demonstrate the practicality of the proposed framework in vehicular networks, providing a reliable and trustworthy approach for IoV communication.
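The traceability that a blockchain lends to handover records can be illustrated with a minimal hash-chained log in Python. This is a sketch under stated assumptions, not the framework from the paper: the record fields, the helper names `make_block` and `verify_chain`, and the all-zero genesis hash are hypothetical, and the sketch leaves out consensus (PoR), IPFS storage, and ECC signatures entirely.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # assumed placeholder for the first block's predecessor

def block_digest(prev_hash, record):
    """Hash the previous block's hash together with this block's record."""
    body = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()

def make_block(prev_hash, record):
    """Create a block that is bound to its predecessor through prev_hash."""
    return {"prev": prev_hash, "record": record,
            "hash": block_digest(prev_hash, record)}

def verify_chain(chain):
    """Return True only if every block links to the one before it and is unmodified."""
    prev = GENESIS_HASH
    for block in chain:
        if block["prev"] != prev:
            return False
        if block["hash"] != block_digest(block["prev"], block["record"]):
            return False
        prev = block["hash"]
    return True

# Hypothetical handover events for one vehicle moving between roadside units.
chain = []
prev = GENESIS_HASH
for record in [{"vehicle": "V1", "from": "RSU-A", "to": "RSU-B"},
               {"vehicle": "V1", "from": "RSU-B", "to": "RSU-C"}]:
    block = make_block(prev, record)
    chain.append(block)
    prev = block["hash"]
```

Because each block's hash covers the previous hash, altering any earlier handover record changes its digest and makes `verify_chain` fail, which is the property that makes such a log auditable.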

Practical Implementation / Social Implications:

The practical application of this research can significantly improve the safety and reliability of autonomous vehicles and connected vehicle networks. By securing the handover process, it reduces the risk of hacking, data breaches, and unauthorized access, making connected vehicle systems safer for the public and contributing to the development of smart transportation infrastructures.

Future Research Plans:

Moving forward, we plan to focus on optimizing blockchain solutions for large-scale IoT and smart city applications, with a particular interest in improving consensus mechanisms and security protocols for real-time operations, such as autonomous driving and smart energy grids.

- Published in CSE NEWS, Departmental News, News, Research News

Mr Ratna Raju Publishes Research on UAV-Assisted Service Caching

Mr M Ratna Raju, Assistant Professor in the Department of Computer Science and Engineering, has achieved a remarkable milestone by publishing a research paper titled “Service caching and user association in cache-enabled multi-UAV assisted MEN for latency-sensitive applications” in the esteemed Q1 journal, Computers and Electrical Engineering, which boasts an impact factor of 4.0.

The paper explores innovative strategies for improving service caching and user association in multi-unmanned aerial vehicle (UAV) networks, addressing challenges faced by latency-sensitive applications. Mr Raju’s research contributes significantly to the field of computer science, particularly in enhancing the efficiency of UAV-assisted networks.

This publication not only highlights Mr Raju’s dedication to cutting-edge research but also reinforces SRM University-AP’s commitment to fostering academic excellence and innovation in technology. As the demand for efficient communication networks continues to grow, findings from this study are poised to play a critical role in shaping the future of network architecture and UAV applications.

The academic community and students alike look forward to Mr Raju’s further contributions as he continues to lead impactful research initiatives at SRM University-AP.

Abstract of the Research

The evolution of 5G (Fifth Generation) and B5G (Beyond 5G) wireless networks and edge IoT (Internet of Things) devices generates an enormous volume of data. The growth of mobile applications, such as augmented reality, virtual reality, network gaming, and self-driving cars, has increased demand for computation-intensive and latency-critical applications. However, these applications require high computation power and low communication latency, which hinders the large-scale adoption of these technologies in IoT devices due to their inherent low computation and low energy capabilities.

MEC (mobile edge computing) is a prominent solution that improves the quality of service by offloading the services near the users. Besides, in emergencies where network failure occurs due to natural calamities, UAVs (Unmanned Aerial Vehicles) can be positioned to reinstate the networking ability by serving as flying base stations and edge servers for mobile edge networks. This article explores computation service caching in a multi-unmanned aerial vehicle-assisted MEC system. The limited resources at the UAV node raise two further problems: assigning the restricted edge resources to satisfy user requests, and associating users with UAVs so that the finite resources are utilised effectively. To address these problems, we formulate the service caching and user association problem by placing the diversified latency-critical services to maximise the time utility under deadline and resource constraints.

The problem is formulated as an integer linear programming (ILP) problem for service placement in mobile edge networks. An approximation algorithm based on the rounding technique is designed to solve the formulated ILP problem. Moreover, a genetic algorithm is designed to address the larger instance of the problem. Simulation results indicate that the proposed service placement schemes considerably enhance the cache hit ratio, load on the cloud and time utility performance compared with existing mechanisms.
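The genetic-algorithm idea can be sketched on a toy version of the caching problem in Python. This is an illustration under stated assumptions rather than the authors' algorithm: the service sizes, UAV capacities, utility values, and the helpers `repair`, `fitness`, and `evolve` are all hypothetical, and the sketch ignores user association and deadlines, which the paper's formulation includes.

```python
import random

# Hypothetical toy instance: 4 services, 2 UAVs.
SIZES = [4, 3, 2, 5]        # storage each service needs
CAPACITY = [7, 6]           # cache capacity of each UAV
UTILITY = [[9, 4, 3, 8],    # UTILITY[u][s]: utility gained if UAV u caches service s
           [2, 7, 5, 6]]

def repair(placement):
    """Drop the least valuable services per unit of storage until each UAV fits."""
    fixed = []
    for u, row in enumerate(placement):
        row = list(row)
        cached = sorted((s for s, bit in enumerate(row) if bit),
                        key=lambda s: UTILITY[u][s] / SIZES[s])
        used = sum(SIZES[s] for s in cached)
        for s in cached:
            if used <= CAPACITY[u]:
                break
            row[s] = 0
            used -= SIZES[s]
        fixed.append(row)
    return fixed

def fitness(placement):
    """Total utility of all cached (UAV, service) pairs."""
    return sum(UTILITY[u][s]
               for u, row in enumerate(placement)
               for s, bit in enumerate(row) if bit)

def evolve(generations=50, pop_size=20, mutation_rate=0.1, seed=0):
    """Evolve feasible placements with elitism, uniform crossover, and bit-flip mutation."""
    rng = random.Random(seed)
    n_uav, n_srv = len(CAPACITY), len(SIZES)
    population = [repair([[rng.randint(0, 1) for _ in range(n_srv)]
                          for _ in range(n_uav)])
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[:pop_size // 2]      # keep the better half
        children = []
        while len(parents) + len(children) < pop_size:
            mum, dad = rng.sample(parents, 2)
            child = [[rng.choice(pair) for pair in zip(m_row, d_row)]
                     for m_row, d_row in zip(mum, dad)]
            child = [[bit ^ int(rng.random() < mutation_rate) for bit in row]
                     for row in child]
            children.append(repair(child))
        population = parents + children
    return max(population, key=fitness)

best = evolve()
```

The `repair` step keeps every candidate within the cache capacity, so the search only compares feasible placements. Handling deadlines or user association would require a richer encoding and fitness function than this sketch uses.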

Explanation of the Research in Layperson’s Terms

The rapid growth of 5G (Fifth Generation) and B5G (Beyond 5G) wireless networks, along with edge IoT (Internet of Things) devices, is creating a massive amount of data. As mobile applications like augmented reality (AR), virtual reality (VR), online gaming, and self-driving cars become more popular, there’s a greater need for fast, powerful computing. However, IoT devices typically have limited computing power and energy, making it hard to run these advanced applications. Mobile Edge Computing (MEC) offers a solution to this problem by offloading tasks to servers located closer to users, reducing delays and improving performance.

In cases of emergency where network failure occurs due to natural disasters, Unmanned Aerial Vehicles (UAVs) can be used to restore connectivity. UAVs can act as flying base stations and edge servers, helping mobile edge networks continue functioning. This research focuses on improving how computation services are cached and handled in a system that uses multiple UAVs to assist MEC. Since UAVs have limited resources, there’s a challenge in efficiently assigning these resources to meet user demands. The research proposes a solution by formulating this problem as an integer linear programming (ILP) problem, aiming to place services in a way that maximises performance while considering deadlines and resource limits. To solve this complex issue, the authors use two approaches. First, they apply an approximation algorithm based on a rounding technique to solve the ILP problem. Then, for larger problems, they use a genetic algorithm. Their simulation results show that these service placement strategies significantly improve metrics like cache hit ratio, load reduction on the cloud, and time utility, compared to existing methods.

Practical Implementation and the Social Implications Associated

The practical implementation of this research lies in enhancing the efficiency of real-time, computation-intensive applications like augmented reality (AR), virtual reality (VR), autonomous driving, and network gaming in mobile edge computing (MEC) environments, particularly in scenarios involving Unmanned Aerial Vehicles (UAVs). By optimizing how services are cached and distributed in a multi-UAV-assisted MEC system, the research enables faster data processing and lower latency, which is crucial for applications where even slight delays can cause major issues, such as in self-driving cars or real-time remote surgeries. In emergency situations, such as natural disasters, where ground-based networks may be damaged or overloaded, the deployment of UAVs as flying base stations and edge servers could restore network connectivity quickly and provide essential services. This research ensures that even under such constraints, services are efficiently distributed, enhancing responsiveness and reliability.

Social Implications:

Disaster Relief: UAVs with MEC support could be deployed during natural calamities to restore communication services, helping rescue teams coordinate better and saving lives.

Smart Cities and Autonomous Vehicles: The work contributes to making smart cities more responsive, with real-time data processing and seamless service delivery. Autonomous vehicles, for instance, would benefit from reduced latency, leading to safer and more efficient navigation.

Healthcare: Applications such as telemedicine and remote surgery could operate more effectively with lower latency, improving healthcare delivery in remote or disaster-affected regions.

Collaborations

1. Manoj Kumar Somesula and Banalaxmi Brahma from Dr. B. R. Ambedkar National Institute of Technology Jalandhar, Punjab 144008, India.

2. Mallikarjun Reddy Dorsala from Indian Institute of Information Technology Sri City, Chittoor, Andhra Pradesh 517646, India.

3. Sai Krishna Mothku from National Institute of Technology, Tiruchirappalli 620015, India.

Future Research Plans

In future, we plan to address the challenge of making caching decisions while accounting for the limited energy capacity of UAVs, UAV mobility, network resources, and service dependencies. These factors introduce new complexities in designing algorithms that minimise the overall service delay while adhering to constraints on energy consumption, UAV mobility, and network resources. This would require the joint optimisation of service caching placement, UAV trajectory, UE-UAV association, and task offloading.

- Published in CSE NEWS, Departmental News, News, Research News