In a commendable stride toward advancing edge AI technology, Dr Swagata Samanta, Assistant Professor in the Department of Electronics and Communication Engineering, along with B.Tech students Amrit Kumar Singha and Arnov Paul, has successfully filed and published a patent titled “A System for FPGA-based Acceleration of Support Vector Machine (SVM) Computations, and a Method Thereof” in the Patent Office Journal.

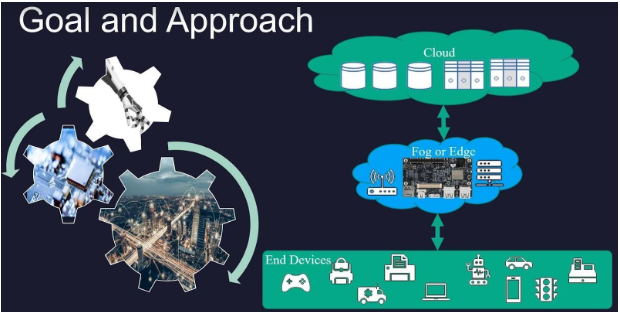

The patented system introduces a novel approach to speeding up machine learning algorithms, specifically Support Vector Machines, by implementing them on Field-Programmable Gate Array (FPGA)-based embedded systems. By harnessing the capabilities of Xilinx’s Vitis High-Level Synthesis (HLS), the team developed a hardware-accelerated solution that enhances computational efficiency while simplifying the design process through C++-based abstraction.

Abstract:

By utilising the power and flexibility of FPGAs, the aim is to enhance the performance and efficiency of compute-intensive SVM tasks without delving into intricate low-level hardware details. The approach involves implementing the fundamental concepts of SVM algorithms using the Vitis HLS design flow provided by Xilinx. Vitis HLS allows us to describe these algorithms at a higher level of abstraction using C++, enabling faster development and easier optimisation compared to traditional HDL-based designs.
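To give a sense of what describing an SVM in C++ for HLS can look like, the following is a minimal sketch of a linear SVM decision function, f(x) = w · x + b. It is an illustration only and not the patented design: the feature count, the function names `svm_decision` and `svm_classify`, and the choice of a linear kernel are all assumptions. `#pragma HLS UNROLL` is a Vitis HLS directive that asks the tool to replicate the loop body in hardware; a standard C++ compiler ignores it, possibly with a warning.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical feature count, chosen for illustration (not from the patent).
constexpr std::size_t N_FEATURES = 4;

// Linear SVM decision value: f(x) = w . x + b.
// Fixed-size arrays keep the loop bound known at compile time,
// which is what lets an HLS tool unroll the loop into parallel multipliers.
float svm_decision(const float x[N_FEATURES],
                   const float w[N_FEATURES],
                   float b) {
    assert(x != nullptr && w != nullptr);
    float acc = b;
    for (std::size_t i = 0; i < N_FEATURES; ++i) {
#pragma HLS UNROLL
        acc += w[i] * x[i];
    }
    return acc;
}

// Map the decision value to a class label: +1 or -1.
int svm_classify(const float x[N_FEATURES],
                 const float w[N_FEATURES],
                 float b) {
    return svm_decision(x, w, b) >= 0.0f ? 1 : -1;
}
```

The same source can be compiled and checked on a desktop before synthesis, which is part of what makes the C++-based flow quicker to iterate on than hand-written HDL.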

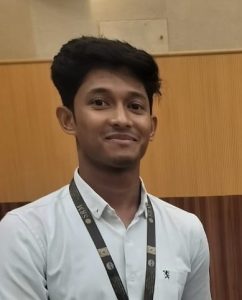

By leveraging the capabilities of Xilinx Zynq-based embedded systems, we can efficiently accelerate these algorithms and improve overall system performance. GDS2 is a standard file format used for representing integrated circuit layouts, playing a crucial role in the physical design and fabrication of FPGAs by capturing the geometric and connectivity information of components such as logic blocks, interconnects, and I/O pads.

Proper GDS2 layout design is essential for ensuring manufacturability, optimising performance, maximising area utilisation, and maintaining signal integrity within an FPGA. It must take into account the physical constraints and design rules imposed by the fabrication process in order to minimise signal propagation delays, reduce power consumption, optimise timing, achieve higher density, and minimise wasted space. It must also employ proper routing and shielding techniques to minimise crosstalk, signal reflections, and other signal integrity issues. By combining HLS using Vitis with the Cadence GDS2 layout design, this project aims to accelerate SVM algorithms on FPGA-based embedded systems.

The use of Vitis HLS simplifies the development process and enhances productivity, while the GDS2 layout design ensures manufacturability, performance optimisation, efficient area utilisation, and signal integrity. This work showcases the potential of using FPGAs for hardware acceleration of machine learning algorithms, opening up new possibilities for embedded systems in various domains such as computer vision, natural language processing, and data analytics.

Implementation and Impact:

This work advances machine learning on FPGAs by optimising SVM algorithms for speed via parallel processing, supporting multiple ML models, and using Vitis HLS for efficient hardware-software co-design, while reducing power consumption and enabling scalability through multi-FPGA or hybrid systems. We plan to test the system in real-world IoT, automotive, and medical applications, compress models with pruning and quantisation, transition to ASICs for mass production, and develop standardised interfaces and on-device learning to enhance privacy and adaptability.
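As one illustration of the quantisation step mentioned above, the sketch below shows symmetric 8-bit quantisation of a weight vector, one common way to shrink a model so that multiplications can run on narrow integer hardware. It is a generic technique shown under assumptions, not the team's method: the names `quantise_weights` and `dot_int8`, the vector length, and the per-vector scale are all hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>

// Hypothetical vector length, chosen for illustration.
constexpr std::size_t N_WEIGHTS = 4;

// Symmetric int8 quantisation: q[i] = round(w[i] / scale),
// with scale = max|w| / 127 so the largest weight maps to +/-127.
// Returns the scale needed to convert integer results back to real values.
float quantise_weights(const float w[N_WEIGHTS], std::int8_t q[N_WEIGHTS]) {
    float max_abs = 0.0f;
    for (std::size_t i = 0; i < N_WEIGHTS; ++i) {
        max_abs = std::fmax(max_abs, std::fabs(w[i]));
    }
    // Fall back to a scale of 1 for an all-zero vector to avoid dividing by zero.
    const float scale = (max_abs > 0.0f) ? max_abs / 127.0f : 1.0f;
    assert(scale > 0.0f);
    for (std::size_t i = 0; i < N_WEIGHTS; ++i) {
        q[i] = static_cast<std::int8_t>(std::lround(w[i] / scale));
    }
    return scale;
}

// Integer dot product of two int8 vectors, accumulated in 32 bits
// so the sum cannot overflow for short vectors like this one.
std::int32_t dot_int8(const std::int8_t a[N_WEIGHTS],
                      const std::int8_t b[N_WEIGHTS]) {
    std::int32_t acc = 0;
    for (std::size_t i = 0; i < N_WEIGHTS; ++i) {
        acc += static_cast<std::int32_t>(a[i]) * static_cast<std::int32_t>(b[i]);
    }
    return acc;
}
```

Multiplying the integer dot product by the weight scale (and by the input scale, if the inputs are quantised the same way) recovers an approximation of the floating-point result, at the cost of some rounding error.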

These advancements could make AI more accessible for low-cost medical diagnostics or smart devices in underserved areas, reduce carbon footprints through energy efficiency, boost economic growth through job creation, and improve safety in self-driving cars and smart homes. However, ethical design is crucial to prevent bias or misuse and ensure equitable benefits across society.

Future Directions:

Building on this foundation, the team plans to expand their architecture to support additional ML models, deepen hardware-software co-design efforts, and implement on-device learning for adaptive, privacy-preserving intelligence. Long-term goals also include transitioning to custom ASIC implementations for mass production and developing standardised interfaces to enhance system interoperability.